06/06/2024

...

1. General Information

2. Quality System Requirements

3. Measurement Quality Check Requirements

4. Calculations for Data Quality Assessments

5. Reporting Requirements

6. References

1. General Information

1.1 Applicability.

(a) This appendix specifies the minimum quality assurance requirements for the control and assessment of the quality of the ambient air monitoring data submitted to a PSD reviewing authority or the EPA by an organization operating an air monitoring station, or network of stations, operated in order to comply with Part 51 New Source Review - Prevention of Significant Deterioration (PSD). Such organizations are encouraged to develop and maintain quality assurance programs more extensive than the required minimum. Additional guidance for the requirements reflected in this appendix can be found in the "Quality Assurance Handbook for Air Pollution Measurement Systems," Volume II (Ambient Air) and "Quality Assurance Handbook for Air Pollution Measurement Systems," Volume IV (Meteorological Measurements) and at a national level in references 1, 2, and 3 of this appendix.

(b) It is not assumed that data generated for PSD under this appendix will be used in making NAAQS decisions. However, if all the requirements in this appendix are followed (including the NPEP programs) and reported to AQS, with review and concurrence from the EPA region, data may be used for NAAQS decisions. With the exception of the NPEP programs (NPAP, PM2.5 PEP, Pb-PEP), for which implementation is at the discretion of the PSD reviewing authority, all other quality assurance and quality control requirements found in the appendix must be met.

1.2 PSD Primary Quality Assurance Organization (PQAO). A PSD PQAO is defined as a monitoring organization or a coordinated aggregation of such organizations that is responsible for a set of stations within one PSD reviewing authority that monitors the same pollutant and for which data quality assessments will be pooled. Each criteria pollutant sampler/monitor must be associated with only one PSD PQAO.

1.2.1 Each PSD PQAO shall be defined such that measurement uncertainty among all stations in the organization can be expected to be reasonably homogeneous, as a result of common factors. A PSD PQAO must be associated with only one PSD reviewing authority. Common factors that should be considered in defining PSD PQAOs include:

(a) Operation by a common team of field operators according to a common set of procedures;

(b) Use of a common QAPP and/or standard operating procedures;

(c) Common calibration facilities and standards;

(d) Oversight by a common quality assurance organization; and

(e) Support by a common management organization or laboratory.

1.2.2 PSD monitoring organizations having difficulty describing their PQAO or assigning specific monitors to a PSD PQAO should consult with the PSD reviewing authority. Any consolidation of PSD PQAOs shall be subject to final approval by the PSD reviewing authority.

1.2.3 Each PSD PQAO is required to implement a quality system that provides sufficient information to assess the quality of the monitoring data. The quality system must, at a minimum, include the specific requirements described in this appendix. Failure to conduct or pass a required check or procedure, or a series of required checks or procedures, does not by itself invalidate data for regulatory decision making. Rather, PSD PQAOs and the PSD reviewing authority shall use the checks and procedures required in this appendix in combination with other data quality information, reports, and similar documentation that demonstrate overall compliance with parts 51, 52 and 58 of this chapter. Accordingly, the PSD reviewing authority shall use a "weight of evidence" approach when determining the suitability of data for regulatory decisions. The PSD reviewing authority reserves the authority to use or not use monitoring data submitted by a PSD monitoring organization when making regulatory decisions based on the PSD reviewing authority's assessment of the quality of the data. Generally, consensus-built validation templates or validation criteria already approved in quality assurance project plans (QAPPs) should be used as the basis for the weight of evidence approach.

1.3 Definitions.

(a) Measurement Uncertainty. A term used to describe deviations from a true concentration or estimate that are related to the measurement process and not to spatial or temporal population attributes of the air being measured.

(b) Precision. A measurement of mutual agreement among individual measurements of the same property usually under prescribed similar conditions, expressed generally in terms of the standard deviation.

(c) Bias. The systematic or persistent distortion of a measurement process which causes errors in one direction.

(d) Accuracy. The degree of agreement between an observed value and an accepted reference value. Accuracy includes a combination of random error (imprecision) and systematic error (bias) components which are due to sampling and analytical operations.

(e) Completeness. A measure of the amount of valid data obtained from a measurement system compared to the amount that was expected to be obtained under correct, normal conditions.

(f) Detectability. The low critical range value of a characteristic that a method specific procedure can reliably discern.

1.4 Measurement Quality Check Reporting. The measurement quality checks described in section 3 of this appendix are required to be submitted to the PSD reviewing authority within the same time frame as routinely-collected ambient concentration data as described in 40 CFR 58.16. The PSD reviewing authority may also require that the measurement quality check data be reported to AQS.

1.5 Assessments and Reports. Periodic assessments and documentation of data quality are required to be reported to the PSD reviewing authority. To provide national uniformity in this assessment and reporting of data quality for all networks, specific assessment and reporting procedures are prescribed in detail in sections 3, 4, and 5 of this appendix.

2. Quality System Requirements

A quality system (reference 1 of this appendix) is the means by which an organization manages the quality of the monitoring information it produces in a systematic, organized manner. It provides a framework for planning, implementing, assessing and reporting work performed by an organization and for carrying out required quality assurance and quality control activities.

2.1 Quality Assurance Project Plans. All PSD PQAOs must develop a quality system that is described and approved in quality assurance project plans (QAPP) to ensure that the monitoring results:

(a) Meet a well-defined need, use, or purpose (reference 5 of this appendix);

(b) Provide data of adequate quality for the intended monitoring objectives;

(c) Satisfy stakeholder expectations;

(d) Comply with applicable standards specifications;

(e) Comply with statutory (and other legal) requirements; and

(f) Assure quality assurance and quality control adequacy and independence.

2.1.1 The QAPP is a formal document that describes these activities in sufficient detail and is supported by standard operating procedures. The QAPP must describe how the organization intends to control measurement uncertainty to an appropriate level in order to achieve the objectives for which the data are collected. The QAPP must be documented in accordance with EPA requirements (reference 3 of this appendix).

2.1.2 The PSD PQAO's quality system must have adequate resources both in personnel and funding to plan, implement, assess and report on the achievement of the requirements of this appendix and its approved QAPP.

2.1.3 Incorporation of quality management plan (QMP) elements into the QAPP. The QMP describes the quality system in terms of the organizational structure, functional responsibilities of management and staff, lines of authority, and required interfaces for those planning, implementing, assessing and reporting activities involving environmental data operations (EDO). The PSD PQAOs may combine pertinent elements of the QMP into the QAPP rather than requiring the submission of both QMP and QAPP documents separately, with prior approval of the PSD reviewing authority. Additional guidance on QMPs can be found in reference 2 of this appendix.

2.2 Independence of Quality Assurance Management. The PSD PQAO must provide for a quality assurance management function for its PSD data collection operation, that aspect of the overall management system of the organization that determines and implements the quality policy defined in a PSD PQAO's QAPP. Quality management includes strategic planning, allocation of resources and other systematic planning activities (e.g., planning, implementation, assessing and reporting) pertaining to the quality system. The quality assurance management function must have sufficient technical expertise and management authority to conduct independent oversight and assure the implementation of the organization's quality system relative to the ambient air quality monitoring program and should be organizationally independent of environmental data generation activities.

2.3 Data Quality Performance Requirements.

2.3.1 Data Quality Objectives (DQOs). The DQOs, or the results of other systematic planning processes, are statements that define the appropriate type of data to collect and specify the tolerable levels of potential decision errors that will be used as a basis for establishing the quality and quantity of data needed to support air monitoring objectives (reference 5 of this appendix). The DQOs have been developed by the EPA to support attainment decisions for comparison to national ambient air quality standards (NAAQS). The PSD reviewing authority and the PSD monitoring organization will be jointly responsible for determining whether adherence to the EPA-developed NAAQS DQOs specified in appendix A of this part is appropriate or whether DQOs from a project-specific systematic planning process are necessary.

2.3.1.1 Measurement Uncertainty for Automated and Manual PM2.5 Methods. The goal for acceptable measurement uncertainty for precision is defined as an upper 90 percent confidence limit for the coefficient of variation (CV) of 10 percent and plus or minus 10 percent for total bias.

2.3.1.2 Measurement Uncertainty for Automated Ozone Methods. The goal for acceptable measurement uncertainty is defined for precision as an upper 90 percent confidence limit for the CV of 7 percent and for bias as an upper 95 percent confidence limit for the absolute bias of 7 percent.

2.3.1.3 Measurement Uncertainty for Pb Methods. The goal for acceptable measurement uncertainty is defined for precision as an upper 90 percent confidence limit for the CV of 20 percent and for bias as an upper 95 percent confidence limit for the absolute bias of 15 percent.

2.3.1.4 Measurement Uncertainty for NO2. The goal for acceptable measurement uncertainty is defined for precision as an upper 90 percent confidence limit for the CV of 15 percent and for bias as an upper 95 percent confidence limit for the absolute bias of 15 percent.

2.3.1.5 Measurement Uncertainty for SO2. The goal for acceptable measurement uncertainty is defined for precision as an upper 90 percent confidence limit for the CV of 10 percent and for bias as an upper 95 percent confidence limit for the absolute bias of 10 percent.
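The goals in sections 2.3.1.1 through 2.3.1.5 can be collected into a lookup table. The following is a minimal sketch, not part of the rule: the names `DQO_GOALS` and `meets_goal` are hypothetical, the precision and bias statistics are assumed to have been computed elsewhere (the procedures are in section 4 of this appendix), and treating a value exactly equal to the goal as acceptable is an assumption.

```python
# Measurement uncertainty goals from sections 2.3.1.1 through 2.3.1.5, in percent.
# The dictionary layout and the names below are illustrative assumptions.
DQO_GOALS = {
    "PM2.5": {"cv": 10.0, "bias": 10.0},
    "O3": {"cv": 7.0, "bias": 7.0},
    "Pb": {"cv": 20.0, "bias": 15.0},
    "NO2": {"cv": 15.0, "bias": 15.0},
    "SO2": {"cv": 10.0, "bias": 10.0},
}


def meets_goal(pollutant: str, cv_upper_bound: float, bias_estimate: float) -> bool:
    """Return True when both statistics are within the goal for the pollutant.

    cv_upper_bound: upper 90 percent confidence limit for the CV, in percent.
    bias_estimate: bias statistic in percent; its absolute value is compared.
    A value equal to the goal is treated as acceptable (an assumption).
    """
    goal = DQO_GOALS[pollutant]
    return cv_upper_bound <= goal["cv"] and abs(bias_estimate) <= goal["bias"]
```

For example, `meets_goal("O3", 6.5, -6.9)` is true, while a CV bound of 7.5 percent for ozone would fall outside the goal.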

2.4 National Performance Evaluation Program. Organizations operating PSD monitoring networks are required to implement the EPA's national performance evaluation program (NPEP) if the data will be used for NAAQS decisions and at the discretion of the PSD reviewing authority if PSD data are not used for NAAQS decisions. The NPEP includes the National Performance Audit Program (NPAP), the PM2.5 Performance Evaluation Program (PM2.5-PEP) and the Pb Performance Evaluation Program (Pb-PEP). The PSD QAPP shall provide for the implementation of NPEP including the provision of adequate resources for such NPEP if the data will be used for NAAQS decisions or if required by the PSD reviewing authority. Contact the PSD reviewing authority to determine the best procedure for implementing the audits, which may include an audit by the PSD reviewing authority, an audit by a contractor certified for the activity, or self-implementation as described in the sections below. A determination of which entity will be performing this audit program should be made as early as possible and during the QAPP development process. PSD PQAOs that plan to self-implement these programs rather than use the federal programs, including contractors that plan to implement these programs on behalf of PSD PQAOs, must meet the adequacy requirements found in the appropriate sections that follow, as well as meet the definition of independent assessment that follows.

2.4.1 Independent Assessment. An assessment performed by a qualified individual, group, or organization that is not part of the organization directly performing and accountable for the work being assessed. This auditing organization must not be involved with the generation of the routinely-collected ambient air monitoring data. An organization can conduct the performance evaluation (PE) if it can meet this definition and has a management structure that, at a minimum, will allow for the separation of its routine sampling personnel from its auditing personnel by two levels of management. In addition, the sample analysis of audit filters must be performed by a laboratory facility and laboratory equipment separate from the facilities used for routine sample analysis. Field and laboratory personnel will be required to meet the performance evaluation field and laboratory training and certification requirements. The PSD PQAO will be required to participate in the centralized field and laboratory standards certification and comparison processes to establish comparability to federally implemented programs.

2.5 Technical Systems Audit Program. The PSD reviewing authority or the EPA may conduct system audits of the ambient air monitoring programs or organizations operating PSD networks. The PSD monitoring organizations shall consult with the PSD reviewing authority to verify the schedule of any such technical systems audit. Systems audit programs are described in reference 10 of this appendix.

2.6 Gaseous and Flow Rate Audit Standards.

2.6.1 Gaseous pollutant concentration standards (permeation devices or cylinders of compressed gas) used to obtain test concentrations for CO, SO2, NO, and NO2 must be EPA Protocol Gases certified in accordance with one of the procedures given in Reference 4 of this appendix.

2.6.1.1 The concentrations of EPA Protocol Gas standards used for ambient air monitoring must be certified with a 95-percent confidence interval to have an analytical uncertainty of no more than ±2.0 percent (inclusive) of the certified concentration (tag value) of the gas mixture. The uncertainty must be calculated in accordance with the statistical procedures defined in Reference 4 of this appendix.

2.6.1.2 Specialty gas producers advertising certification with the procedures provided in Reference 4 of this appendix and distributing gases as "EPA Protocol Gas" for ambient air monitoring purposes must adhere to the regulatory requirements specified in 40 CFR 75.21(g) or not use "EPA" in any form of advertising. The PSD PQAOs must provide information to the PSD reviewing authority on the specialty gas producers they use (or will use) for the duration of the PSD monitoring project. This information can be provided in the QAPP or monitoring plan but must be updated if there is a change in the specialty gas producers used.

2.6.2 Test concentrations for ozone (O3) must be obtained in accordance with the ultraviolet photometric calibration procedure specified in appendix D to Part 50, and by means of a certified NIST-traceable O3 transfer standard. Consult references 7 and 8 of this appendix for guidance on transfer standards for O3.

2.6.3 Flow rate measurements must be made by a flow measuring instrument that is NIST-traceable to an authoritative volume or other applicable standard. Guidance for certifying some types of flow-meters is provided in reference 10 of this appendix.

2.7 Primary Requirements and Guidance. Requirements and guidance documents for developing the quality system are contained in references 1 through 11 of this appendix, which also contain many suggested procedures, checks, and control specifications. Reference 10 describes specific guidance for the development of a quality system for data collected for comparison to the NAAQS. Many specific quality control checks and specifications for methods are included in the respective reference methods described in Part 50 or in the respective equivalent method descriptions available from the EPA (reference 6 of this appendix). Similarly, quality control procedures related to specifically designated reference and equivalent method monitors are contained in the respective operation or instruction manuals associated with those monitors. For PSD monitoring, the use of reference and equivalent method monitors is required.

3. Measurement Quality Check Requirements

This section provides the requirements for PSD PQAOs to perform the measurement quality checks that can be used to assess data quality. Data from these checks are required to be submitted to the PSD reviewing authority within the same time frame as routinely-collected ambient concentration data as described in 40 CFR 58.16. Table B-1 of this appendix provides a summary of the types and frequency of the measurement quality checks that are described in this section. Reporting these results to AQS may be required by the PSD reviewing authority.

3.1 Gaseous monitors of SO2, NO2, O3, and CO.

3.1.1 One-Point Quality Control (QC) Check for SO2, NO2, O3, and CO. (a) A one-point QC check must be performed at least once every 2 weeks on each automated monitor used to measure SO2, NO2, O3 and CO. With the advent of automated calibration systems, more frequent checking is strongly encouraged and may be required by the PSD reviewing authority. See Reference 10 of this appendix for guidance on the review procedure. The QC check is made by challenging the monitor with a QC check gas of known concentration (effective concentration for open path monitors) within the prescribed range of 0.005 to 0.08 parts per million (ppm) for SO2, NO2, and O3, and within the prescribed range of 0.5 to 5 ppm for CO monitors. The QC check gas concentration selected within the prescribed range should be related to monitoring objectives for the monitor. For trace-level monitoring, the QC check concentration should be selected to represent the mean or median concentrations at the site. If the mean or median concentrations at trace gas sites are below the MDL of the instrument, the agency can select the lowest concentration in the prescribed range that can be practically achieved. If the mean or median concentrations at trace gas sites are above the prescribed range, the agency can select the highest concentration in the prescribed range. The PSD monitoring organization will consult with the PSD reviewing authority on the most appropriate one-point QC concentration based on the objectives of the monitoring activity. An additional QC check point is encouraged for those organizations that may have occasional high values or would like to confirm the monitors' linearity at the higher end of the operational range or around NAAQS concentrations. If monitoring for NAAQS decisions, the QC concentration can be selected at a higher concentration within the prescribed range, but precision points around mean or median concentrations should also be considered.

(b) Point analyzers must operate in their normal sampling mode during the QC check and the test atmosphere must pass through all filters, scrubbers, conditioners and other components used during normal ambient sampling and as much of the ambient air inlet system as is practicable. The QC check must be conducted before any calibration or adjustment to the monitor.

(c) Open-path monitors are tested by inserting a test cell containing a QC check gas concentration into the optical measurement beam of the instrument. If possible, the normally used transmitter, receiver, and as appropriate, reflecting devices should be used during the test and the normal monitoring configuration of the instrument should be altered as little as possible to accommodate the test cell for the test. However, if permitted by the associated operation or instruction manual, an alternate local light source or an alternate optical path that does not include the normal atmospheric monitoring path may be used. The actual concentration of the QC check gas in the test cell must be selected to produce an effective concentration in the range specified earlier in this section. Generally, the QC test concentration measurement will be the sum of the atmospheric pollutant concentration and the QC test concentration. As such, the result must be corrected to remove the atmospheric concentration contribution. The corrected concentration is obtained by subtracting the average of the atmospheric concentrations measured by the open path instrument under test immediately before and immediately after the QC test from the QC check gas concentration measurement. If the difference between these before and after measurements is greater than 20 percent of the effective concentration of the test gas, discard the test result and repeat the test. If possible, open path monitors should be tested during periods when the atmospheric pollutant concentrations are relatively low and steady.

(d) Report the audit concentration of the QC gas and the corresponding measured concentration indicated by the monitor. The percent differences between these concentrations are used to assess the precision and bias of the monitoring data as described in sections 4.1.2 (precision) and 4.1.3 (bias) of this appendix.
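The range check and the percent difference used for the one-point QC check can be sketched as follows. This is an illustration only: the names are hypothetical, treating the range endpoints as inclusive is an assumption, and the percent difference formula (measured minus audit, divided by audit, times 100) is assumed here because the calculation itself is defined in section 4 of this appendix rather than in this section.

```python
# Prescribed one-point QC check gas ranges from section 3.1.1(a), in ppm.
# Endpoints are treated as inclusive, which is an assumption.
QC_RANGE_PPM = {
    "SO2": (0.005, 0.08),
    "NO2": (0.005, 0.08),
    "O3": (0.005, 0.08),
    "CO": (0.5, 5.0),
}


def qc_concentration_in_range(pollutant: str, audit_ppm: float) -> bool:
    """Check that a QC check gas concentration lies in the prescribed range."""
    low, high = QC_RANGE_PPM[pollutant]
    return low <= audit_ppm <= high


def percent_difference(measured: float, audit: float) -> float:
    """Signed percent difference of the monitor reading relative to the audit value.

    Assumed formula: (measured - audit) / audit * 100.
    """
    if audit <= 0:
        raise ValueError("audit concentration must be positive")
    return (measured - audit) / audit * 100.0
```

A monitor that reads 0.042 ppm against a 0.040 ppm ozone check gas has a percent difference of about +5 percent under this formula.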

3.1.2 Quarterly performance evaluation for SO2, NO2, O3, or CO. Evaluate each primary monitor each monitoring quarter (or 90 day frequency) during which monitors are operated or at least once (if operated for less than one quarter). The quarterly performance evaluation (quarterly PE) must be performed by a qualified individual, group, or organization that is not part of the organization directly performing and accountable for the work being assessed. The person or entity performing the quarterly PE must not be involved with the generation of the routinely-collected ambient air monitoring data. A PSD monitoring organization can conduct the quarterly PE itself if it can meet this definition and has a management structure that, at a minimum, will allow for the separation of its routine sampling personnel from its auditing personnel by two levels of management. The quarterly PE also requires a set of equipment and standards independent from those used for routine calibrations or zero, span or precision checks.

3.1.2.1 The evaluation is made by challenging the monitor with audit gas standards of known concentration from at least three audit levels. One point must be within two to three times the method detection limit of the instruments within the PQAO's network. The second point will be less than or equal to the 99th percentile of the data at the site or the network of sites in the PQAO or the next highest audit concentration level. The third point can be around the primary NAAQS or the highest 3-year concentration at the site or the network of sites in the PQAO. An additional 4th level is encouraged for those PSD organizations that would like to confirm the monitor's linearity at the higher end of the operational range. In rare circumstances, there may be sites measuring concentrations above audit level 10. These sites should be identified to the PSD reviewing authority.

| Audit level | O3, ppm | SO2, ppm | NO2, ppm | CO, ppm |
|---|---|---|---|---|
| 1 | 0.004-0.0059 | 0.0003-0.0029 | 0.0003-0.0029 | 0.020-0.059 |
| 2 | 0.006-0.019 | 0.0030-0.0049 | 0.0030-0.0049 | 0.060-0.199 |
| 3 | 0.020-0.039 | 0.0050-0.0079 | 0.0050-0.0079 | 0.200-0.899 |
| 4 | 0.040-0.069 | 0.0080-0.0199 | 0.0080-0.0199 | 0.900-2.999 |
| 5 | 0.070-0.089 | 0.0200-0.0499 | 0.0200-0.0499 | 3.000-7.999 |
| 6 | 0.090-0.119 | 0.0500-0.0999 | 0.0500-0.0999 | 8.000-15.999 |
| 7 | 0.120-0.139 | 0.1000-0.1499 | 0.1000-0.2999 | 16.000-30.999 |
| 8 | 0.140-0.169 | 0.1500-0.2599 | 0.3000-0.4999 | 31.000-39.999 |
| 9 | 0.170-0.189 | 0.2600-0.7999 | 0.5000-0.7999 | 40.000-49.999 |
| 10 | 0.190-0.259 | 0.8000-1.000 | 0.8000-1.000 | 50.000-60.000 |
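The audit level table above lends itself to a simple lookup. The sketch below is illustrative and uses hypothetical names (`AUDIT_LEVELS_PPM`, `audit_level`); it treats each printed range as inclusive at both ends, which is an assumption, and returns `None` for a concentration that falls outside every printed range, including the small gaps between adjacent ranges.

```python
# Audit level concentration ranges in ppm, transcribed from the table above.
# List position 0 corresponds to audit level 1.
AUDIT_LEVELS_PPM = {
    "O3": [(0.004, 0.0059), (0.006, 0.019), (0.020, 0.039), (0.040, 0.069),
           (0.070, 0.089), (0.090, 0.119), (0.120, 0.139), (0.140, 0.169),
           (0.170, 0.189), (0.190, 0.259)],
    "SO2": [(0.0003, 0.0029), (0.0030, 0.0049), (0.0050, 0.0079),
            (0.0080, 0.0199), (0.0200, 0.0499), (0.0500, 0.0999),
            (0.1000, 0.1499), (0.1500, 0.2599), (0.2600, 0.7999),
            (0.8000, 1.000)],
    "NO2": [(0.0003, 0.0029), (0.0030, 0.0049), (0.0050, 0.0079),
            (0.0080, 0.0199), (0.0200, 0.0499), (0.0500, 0.0999),
            (0.1000, 0.2999), (0.3000, 0.4999), (0.5000, 0.7999),
            (0.8000, 1.000)],
    "CO": [(0.020, 0.059), (0.060, 0.199), (0.200, 0.899), (0.900, 2.999),
           (3.000, 7.999), (8.000, 15.999), (16.000, 30.999),
           (31.000, 39.999), (40.000, 49.999), (50.000, 60.000)],
}


def audit_level(pollutant: str, concentration_ppm: float):
    """Return the audit level (1 to 10) containing the concentration, or None."""
    ranges = AUDIT_LEVELS_PPM[pollutant]
    for level, (low, high) in enumerate(ranges, start=1):
        if low <= concentration_ppm <= high:
            return level
    return None
```

Note that the same concentration can map to different levels for different pollutants: 0.2 ppm is level 8 for SO2 but level 7 for NO2.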

3.1.2.2 [Reserved]

3.1.2.3 The standards from which audit gas test concentrations are obtained must meet the specifications of section 2.6.1 of this appendix.

3.1.2.4 For point analyzers, the evaluation shall be carried out by allowing the monitor to analyze the audit gas test atmosphere in its normal sampling mode such that the test atmosphere passes through all filters, scrubbers, conditioners, and other sample inlet components used during normal ambient sampling and as much of the ambient air inlet system as is practicable.

3.1.2.5 Open-path monitors are evaluated by inserting a test cell containing the various audit gas concentrations into the optical measurement beam of the instrument. If possible, the normally used transmitter, receiver, and, as appropriate, reflecting devices should be used during the evaluation, and the normal monitoring configuration of the instrument should be modified as little as possible to accommodate the test cell for the evaluation. However, if permitted by the associated operation or instruction manual, an alternate local light source or an alternate optical path that does not include the normal atmospheric monitoring path may be used. The actual concentrations of the audit gas in the test cell must be selected to produce effective concentrations in the evaluation level ranges specified in this section of this appendix. Generally, each evaluation concentration measurement result will be the sum of the atmospheric pollutant concentration and the evaluation test concentration. As such, the result must be corrected to remove the atmospheric concentration contribution. The corrected concentration is obtained by subtracting the average of the atmospheric concentrations measured by the open-path instrument under test immediately before and immediately after the evaluation test (or preferably before and after each evaluation concentration level) from the evaluation concentration measurement. If the difference between the before and after measurements is greater than 20 percent of the effective concentration of the test gas standard, discard the test result for that concentration level and repeat the test for that level. If possible, open-path monitors should be evaluated during periods when the atmospheric pollutant concentrations are relatively low and steady. 
Also, if the open-path instrument is not installed in a permanent manner, the monitoring path length must be reverified to be within ±3 percent to validate the evaluation, since the monitoring path length is critical to the determination of the effective concentration.
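The open-path correction described in section 3.1.2.5 (and, for the QC check, in section 3.1.1(c)) can be sketched as a single function. The function name and the choice to signal a discarded test with `None` are illustrative assumptions; all concentrations are taken to be in the same units.

```python
def corrected_open_path_result(measured, before, after, effective):
    """Remove the atmospheric contribution from an open-path test measurement.

    measured:  concentration indicated during the test (atmosphere plus test gas)
    before:    atmospheric concentration measured immediately before the test
    after:     atmospheric concentration measured immediately after the test
    effective: effective concentration of the test gas standard

    Returns the corrected concentration, or None when the before and after
    readings differ by more than 20 percent of the effective concentration,
    in which case the result is discarded and the test repeated.
    """
    if abs(before - after) > 0.20 * effective:
        return None
    atmospheric_average = (before + after) / 2.0
    return measured - atmospheric_average
```

For example, with a reading of 0.060 during the test, 0.010 before, 0.012 after, and an effective concentration of 0.050, the before and after difference (0.002) is within the 20 percent limit (0.010), and the corrected concentration is about 0.049.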

3.1.2.6 Report both the evaluation concentrations (effective concentrations for open-path monitors) of the audit gases and the corresponding measured concentration (corrected concentrations, if applicable, for open-path monitors) indicated or produced by the monitor being tested. The percent differences between these concentrations are used to assess the quality of the monitoring data as described in section 4.1.1 of this appendix.

3.1.3 National Performance Audit Program (NPAP). As stated in sections 1.1 and 2.4, PSD monitoring networks may be subject to the NPEP, which includes the NPAP. The NPAP is a performance evaluation which is a type of audit where quantitative data are collected independently in order to evaluate the proficiency of an analyst, monitoring instrument and laboratory. Due to the implementation approach used in this program, NPAP provides for a national independent assessment of performance with a consistent level of data quality. The NPAP should not be confused with the quarterly PE program described in section 3.1.2. The PSD organizations shall consult with the PSD reviewing authority or the EPA regarding whether the implementation of NPAP is required and the implementation options available. Details of the EPA NPAP can be found in reference 11 of this appendix. The program requirements include:

3.1.3.1 Performing audits on 100 percent of monitors and sites each year including monitors and sites that may be operated for less than 1 year. The PSD reviewing authority has the authority to require more frequent audits at sites they consider to be high priority.

3.1.3.2 Developing a delivery system that will allow for the audit concentration gases to be introduced at the probe inlet where logistically feasible.

3.1.3.3 Using audit gases that are verified against the NIST standard reference methods or special review procedures and validated per the certification periods specified in Reference 4 of this appendix (EPA Traceability Protocol for Assay and Certification of Gaseous Calibration Standards) for CO, SO2, and NO2 and using O3 analyzers that are verified quarterly against a standard reference photometer.

3.1.3.4 The PSD PQAO may elect to self-implement NPAP. In these cases, the PSD reviewing authority will work with those PSD PQAOs to establish training and other technical requirements to establish comparability to federally implemented programs. In addition to meeting the requirements in sections 3.1.3.1 through 3.1.3.3, the PSD PQAO must:

(a) Ensure that the PSD audit system is equivalent to the EPA NPAP audit system and is an entirely separate set of equipment and standards from the equipment used for quarterly performance evaluations. If this system does not generate and analyze the audit concentrations, as the EPA NPAP system does, its equivalence to the EPA NPAP system must be proven to be as accurate under a full range of appropriate and varying conditions as described in section 3.1.3.6.

(b) Perform a whole system check by having the PSD audit system tested at an independent and qualified EPA lab, or equivalent.

(c) Evaluate the system with the EPA NPAP program through collocated auditing at an acceptable number of sites each year (at least one for a PSD network of five or fewer sites; at least two for a network with more than five sites).

(d) Incorporate the NPAP into the PSD PQAO's QAPP.

(e) Be subject to review by independent, EPA-trained personnel.

(f) Participate in initial and update training/certification sessions.
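The minimum number of collocated audit sites in item (c) is a simple threshold rule. As a minimal sketch with a hypothetical function name:

```python
def minimum_collocated_npap_sites(network_site_count: int) -> int:
    """Minimum sites per year for collocated auditing with the EPA NPAP program.

    At least one site for a PSD network of five or fewer sites,
    at least two for a network with more than five sites.
    """
    if network_site_count < 1:
        raise ValueError("a network must contain at least one site")
    return 1 if network_site_count <= 5 else 2
```

These are minimums only; what counts as an "acceptable number" beyond them is left to the program.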

3.2 PM2.5.

3.2.1 Flow Rate Verification for PM2.5. A one-point flow rate verification check must be performed at least once every month (each verification minimally separated by 14 days) on each monitor used to measure PM2.5. The verification is made by checking the operational flow rate of the monitor. If the verification is made in conjunction with a flow rate adjustment, it must be made prior to such flow rate adjustment. For the standard procedure, use a flow rate transfer standard certified in accordance with section 2.6 of this appendix to check the monitor's normal flow rate. Care should be used in selecting and using the flow rate measurement device such that it does not alter the normal operating flow rate of the monitor. Flow rate verification results are to be reported to the PSD reviewing authority quarterly as described in section 5.1. Reporting these results to AQS is encouraged. The percent differences between the audit and measured flow rates are used to assess the bias of the monitoring data as described in section 4.2.2 of this appendix (using flow rates in lieu of concentrations).
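The scheduling constraint for flow rate verifications can be checked mechanically. The sketch below is an illustration with a hypothetical function name; it interprets "every month" as every calendar month between the first and last verification, which is an assumption, and it flags any two consecutive verifications fewer than 14 days apart.

```python
from datetime import date


def verification_schedule_issues(dates):
    """List scheduling problems in a sequence of flow rate verification dates."""
    ordered = sorted(dates)
    issues = []
    # Consecutive verifications must be separated by at least 14 days.
    for earlier, later in zip(ordered, ordered[1:]):
        gap = (later - earlier).days
        if gap < 14:
            issues.append(f"{earlier} and {later} are only {gap} days apart")
    # Every calendar month from the first to the last verification needs one
    # (calendar-month interpretation is an assumption).
    if ordered:
        covered = {(d.year, d.month) for d in ordered}
        year, month = ordered[0].year, ordered[0].month
        last = (ordered[-1].year, ordered[-1].month)
        while (year, month) <= last:
            if (year, month) not in covered:
                issues.append(f"no verification in {year}-{month:02d}")
            month += 1
            if month > 12:
                year, month = year + 1, 1
    return issues
```

An empty list means no problems were found under these assumptions; it does not by itself establish compliance with the section.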

3.2.2 Semi-Annual Flow Rate Audit for PM 2.5. Every 6 months, audit the flow rate of the PM2.5 particulate monitors. For short-term monitoring operations (those less than 1 year), the flow rate audits must occur at start up, at the midpoint, and near the completion of the monitoring project. The audit must be conducted by a trained technician other than the routine site operator. The audit is made by measuring the monitor's normal operating flow rate using a flow rate transfer standard certified in accordance with section 2.6 of this appendix. The flow rate standard used for auditing must not be the same flow rate standard used for verifications or to calibrate the monitor. However, both the calibration standard and the audit standard may be referenced to the same primary flow rate or volume standard. Care must be taken in auditing the flow rate to be certain that the flow measurement device does not alter the normal operating flow rate of the monitor. Report the audit flow rate of the transfer standard and the corresponding flow rate measured by the monitor. The percent differences between these flow rates are used to evaluate monitor performance.

3.2.3 Collocated Sampling Procedures for PM 2.5. A PSD PQAO must have at least one collocated monitor for each PSD monitoring network.

3.2.3.1 For each pair of collocated monitors, designate one sampler as the primary monitor whose concentrations will be used to report air quality for the site, and designate the other as the QC monitor. There can be only one primary monitor at a monitoring site for a given time period.

(a) If the primary monitor is a FRM, then the quality control monitor must be a FRM of the same method designation.

(b) If the primary monitor is a FEM, then the quality control monitor must be a FRM unless the PSD PQAO submits a waiver for this requirement, provides a specific reason why a FRM cannot be implemented, and the waiver is approved by the PSD reviewing authority. If the waiver is approved, then the quality control monitor must be the same method designation as the primary FEM monitor.

3.2.3.2 In addition, the collocated monitors should be deployed according to the following protocol:

(a) The collocated quality control monitor(s) should be deployed at sites with the highest predicted daily PM2.5 concentrations in the network. If the highest PM2.5 concentration site is impractical for collocation purposes, alternative sites approved by the PSD reviewing authority may be selected. If additional collocated sites are necessary, the PSD PQAO and the PSD reviewing authority should determine the appropriate location(s) based on data needs.

(b) The two collocated monitors must be within 4 meters of each other and at least 2 meters apart for flow rates greater than 200 liters/min or at least 1 meter apart for samplers having flow rates less than 200 liters/min to preclude airflow interference. A waiver allowing up to 10 meters horizontal distance and up to 3 meters vertical distance (inlet to inlet) between a primary and collocated quality control monitor may be approved by the PSD reviewing authority for sites at a neighborhood or larger scale of representation. This waiver may be approved during the QAPP review and approval process. Sampling and analytical methodologies must be consistently implemented for both collocated samplers and for all other samplers in the network.

(c) Sample the collocated quality control monitor on a 6-day schedule for sites not requiring daily monitoring and on a 3-day schedule for any site requiring daily monitoring. Report the measurements from both primary and collocated quality control monitors at each collocated sampling site. The calculations for evaluating precision between the two collocated monitors are described in section 4.2.1 of this appendix.
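The horizontal spacing limits in paragraph (b) can be expressed as a short check. This is an illustrative sketch only: the function name and arguments are hypothetical, a flow rate of exactly 200 liters/min (which the text does not address) is treated as the lower-flow case, and the 3-meter vertical limit under a waiver is not modeled.

```python
def horizontal_spacing_ok(distance_m, flow_lpm, waiver=False):
    """Check the inlet-to-inlet horizontal distance between two collocated monitors.

    distance_m: horizontal distance in meters
    flow_lpm:   sampler flow rate in liters/min
    waiver:     True if the reviewing authority approved the 10-meter waiver
    """
    # Assumption: exactly 200 L/min falls in the 1-meter class.
    min_apart = 2.0 if flow_lpm > 200 else 1.0
    max_apart = 10.0 if waiver else 4.0
    return min_apart <= distance_m <= max_apart


print(horizontal_spacing_ok(1.5, 16.7))               # low-volume sampler, 1.5 m apart
print(horizontal_spacing_ok(1.5, 1130.0))             # high-volume sampler, too close
print(horizontal_spacing_ok(6.0, 16.7, waiver=True))  # allowed only under the waiver
```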

3.2.4 PM 2.5 Performance Evaluation Program (PEP) Procedures. The PEP is an independent assessment used to estimate total measurement system bias. These evaluations will be performed under the NPEP as described in section 2.4 of this appendix or a comparable program. Performance evaluations will be performed annually within each PQAO. For PQAOs with less than or equal to five monitoring sites, five valid performance evaluation audits must be collected and reported each year. For PQAOs with greater than five monitoring sites, eight valid performance evaluation audits must be collected and reported each year. A valid performance evaluation audit means that both the primary monitor and PEP audit concentrations are valid and equal to or greater than 2 µg/m3. Siting of the PEP monitor must be consistent with section 3.2.3.4(c) of this appendix. However, any horizontal distance greater than 4 meters and any vertical distance greater than one meter must be reported to the EPA regional PEP coordinator. Additionally, for every monitor designated as a primary monitor, a primary quality assurance organization must:

3.2.4.1 Have each method designation evaluated each year; and,

3.2.4.2 Have all FRM and FEM samplers subject to a PEP audit at least once every 6 years, which equates to approximately 15 percent of the monitoring sites audited each year.

3.2.4.3 Additional information concerning the PEP is contained in Reference 10 of this appendix. The calculations for evaluating bias between the primary monitor and the performance evaluation monitor for PM 2.5 are described in section 4.2.5 of this appendix.
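The annual audit counts and the validity threshold in section 3.2.4 lend themselves to a simple tally. The sketch below is illustrative: the function names are hypothetical, and an invalidated measurement is represented by `None`, which is an assumption about how a data system might flag it.

```python
MIN_VALID_CONC = 2.0  # µg/m3 threshold for a valid PEP audit (section 3.2.4)


def required_pep_audits(n_sites):
    """Valid PEP audits required per year for a PQAO with n_sites monitoring sites."""
    return 5 if n_sites <= 5 else 8


def is_valid_pep_pair(primary, pep):
    """Both concentrations must be present (not None) and at least 2 µg/m3."""
    return all(c is not None and c >= MIN_VALID_CONC for c in (primary, pep))


def audits_still_needed(n_sites, pairs):
    """Number of additional valid audits needed to meet the annual count."""
    valid = sum(is_valid_pep_pair(primary, pep) for primary, pep in pairs)
    return max(0, required_pep_audits(n_sites) - valid)


# Hypothetical (primary, PEP) concentration pairs in µg/m3.
pairs = [(8.1, 7.9), (1.5, 2.2), (None, 6.0), (12.0, 11.4)]
print(audits_still_needed(3, pairs))
```

Only two of the four sample pairs count as valid, so a three-site PQAO would still need three more valid audits in this example.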

3.3 PM 10.

3.3.1 Flow Rate Verification for PM 10. A one-point flow rate verification check must be performed at least once every month (each verification minimally separated by 14 days) on each monitor used to measure PM10. The verification is made by checking the operational flow rate of the monitor. If the verification is made in conjunction with a flow rate adjustment, it must be made prior to such flow rate adjustment. For the standard procedure, use a flow rate transfer standard certified in accordance with section 2.6 of this appendix to check the monitor's normal flow rate. Care should be taken in selecting and using the flow rate measurement device such that it does not alter the normal operating flow rate of the monitor. The percent differences between the audit and measured flow rates are used to assess the bias of the monitoring data as described in section 4.2.2 of this appendix (using flow rates in lieu of concentrations).

3.3.2 Semi-Annual Flow Rate Audit for PM 10. Every 6 months, audit the flow rate of the PM10 particulate monitors. For short-term monitoring operations (those less than 1 year), the flow rate audits must occur at start up, at the midpoint, and near the completion of the monitoring project. Where possible, the EPA strongly encourages more frequent auditing. The audit must be conducted by a trained technician other than the routine site operator. The audit is made by measuring the monitor's normal operating flow rate using a flow rate transfer standard certified in accordance with section 2.6 of this appendix. The flow rate standard used for auditing must not be the same flow rate standard used for verifications or to calibrate the monitor. However, both the calibration standard and the audit standard may be referenced to the same primary flow rate or volume standard. Care must be taken in auditing the flow rate to be certain that the flow measurement device does not alter the normal operating flow rate of the monitor. Report the audit flow rate of the transfer standard and the corresponding flow rate measured by the monitor. The percent differences between these flow rates are used to evaluate monitor performance.

3.3.3 Collocated Sampling Procedures for Manual PM 10. A PSD PQAO must have at least one collocated monitor for each PSD monitoring network.

3.3.3.1 For each pair of collocated monitors, designate one sampler as the primary monitor whose concentrations will be used to report air quality for the site, and designate the other as the quality control monitor.

3.3.3.2 In addition, the collocated monitors should be deployed according to the following protocol:

(a) The collocated quality control monitor(s) should be deployed at sites with the highest predicted daily PM10 concentrations in the network. If the highest PM10 concentration site is impractical for collocation purposes, alternative sites approved by the PSD reviewing authority may be selected.

(b) The two collocated monitors must be within 4 meters of each other and at least 2 meters apart for flow rates greater than 200 liters/min or at least 1 meter apart for samplers having flow rates less than 200 liters/min to preclude airflow interference. A waiver allowing up to 10 meters horizontal distance and up to 3 meters vertical distance (inlet to inlet) between a primary and collocated sampler may be approved by the PSD reviewing authority for sites at a neighborhood or larger scale of representation. This waiver may be approved during the QAPP review and approval process. Sampling and analytical methodologies must be consistently implemented for both collocated samplers and for all other samplers in the network.

(c) Sample the collocated quality control monitor on a 6-day schedule, or on a 3-day schedule for any site requiring daily monitoring. Report the measurements from both primary and collocated quality control monitors at each collocated sampling site. The calculations for evaluating precision between the two collocated monitors are described in section 4.2.1 of this appendix.

(d) In determining the number of collocated sites required for PM10, PSD monitoring networks for Pb-PM10 should be treated independently from networks for particulate matter (PM), even though the separate networks may share one or more common samplers. However, a single quality control monitor that meets the collocation requirements for Pb-PM10 and PM10 may serve as a collocated quality control monitor for both networks. Extreme care must be taken if using the filter from a quality control monitor for both PM10 and Pb analysis. PM10 filter weighing should occur prior to any Pb analysis.

3.4 Pb.

3.4.1 Flow Rate Verification for Pb. A one-point flow rate verification check must be performed at least once every month (each verification minimally separated by 14 days) on each monitor used to measure Pb. The verification is made by checking the operational flow rate of the monitor. If the verification is made in conjunction with a flow rate adjustment, it must be made prior to such flow rate adjustment. Use a flow rate transfer standard certified in accordance with section 2.6 of this appendix to check the monitor's normal flow rate. Care should be taken in selecting and using the flow rate measurement device such that it does not alter the normal operating flow rate of the monitor. The percent differences between the audit and measured flow rates are used to assess the bias of the monitoring data as described in section 4.2.2 of this appendix (using flow rates in lieu of concentrations).

3.4.2 Semi-Annual Flow Rate Audit for Pb. Every 6 months, audit the flow rate of the Pb particulate monitors. For short-term monitoring operations (those less than 1 year), the flow rate audits must occur at start up, at the midpoint, and near the completion of the monitoring project. Where possible, the EPA strongly encourages more frequent auditing. The audit must be conducted by a trained technician other than the routine site operator. The audit is made by measuring the monitor's normal operating flow rate using a flow rate transfer standard certified in accordance with section 2.6 of this appendix. The flow rate standard used for auditing must not be the same flow rate standard used for verifications or to calibrate the monitor. However, both the calibration standard and the audit standard may be referenced to the same primary flow rate or volume standard. Great care must be taken in auditing the flow rate to be certain that the flow measurement device does not alter the normal operating flow rate of the monitor. Report the audit flow rate of the transfer standard and the corresponding flow rate measured by the monitor. The percent differences between these flow rates are used to evaluate monitor performance.

3.4.3 Collocated Sampling for Pb. A PSD PQAO must have at least one collocated monitor for each PSD monitoring network.

3.4.3.1 For each pair of collocated monitors, designate one sampler as the primary monitor whose concentrations will be used to report air quality for the site, and designate the other as the quality control monitor.

3.4.3.2 In addition, the collocated monitors should be deployed according to the following protocol:

(a) The collocated quality control monitor(s) should be deployed at sites with the highest predicted daily Pb concentrations in the network. If the highest Pb concentration site is impractical for collocation purposes, alternative sites approved by the PSD reviewing authority may be selected.

(b) The two collocated monitors must be within 4 meters of each other and at least 2 meters apart for flow rates greater than 200 liters/min or at least 1 meter apart for samplers having flow rates less than 200 liters/min to preclude airflow interference. A waiver allowing up to 10 meters horizontal distance and up to 3 meters vertical distance (inlet to inlet) between a primary and collocated sampler may be approved by the PSD reviewing authority for sites at a neighborhood or larger scale of representation. This waiver may be approved during the QAPP review and approval process. Sampling and analytical methodologies must be consistently implemented for both collocated samplers and all other samplers in the network.

(c) Sample the collocated quality control monitor on a 6-day schedule if daily monitoring is not required, or on a 3-day schedule for any site requiring daily monitoring. Report the measurements from both primary and collocated quality control monitors at each collocated sampling site. The calculations for evaluating precision between the two collocated monitors are described in section 4.2.1 of this appendix.

(d) In determining the number of collocated sites required for Pb-PM10, PSD monitoring networks for PM10 should be treated independently from networks for Pb-PM10, even though the separate networks may share one or more common samplers. However, a single quality control monitor that meets the collocation requirements for Pb-PM10 and PM10 may serve as a collocated quality control monitor for both networks. Extreme care must be taken if using the filter from a quality control monitor for both PM10 and Pb analysis. The PM10 filter weighing should occur prior to any Pb analysis.

3.4.4 Pb Analysis Audits. Each calendar quarter, audit the Pb reference or equivalent method analytical procedure using filters containing a known quantity of Pb. These audit filters are prepared by depositing a Pb standard on unexposed filters and allowing them to dry thoroughly. The audit samples must be prepared using batches of reagents different from those used to calibrate the Pb analytical equipment being audited. Prepare audit samples in the following concentration ranges:

| Range | Equivalent ambient Pb concentration, µg/m3 |
|---|---|
| 1 | 30-100% of Pb NAAQS. |
| 2 | 200-300% of Pb NAAQS. |

(a) Audit samples must be extracted using the same extraction procedure used for exposed filters.

(b) Analyze three audit samples in each of the two ranges each quarter samples are analyzed. The audit sample analyses shall be distributed as much as possible over the entire calendar quarter.

(c) Report the audit concentrations (in µg Pb/filter or strip) and the corresponding measured concentrations (in µg Pb/filter or strip) using AQS unit code 077 (if reporting to AQS). The percent differences between the concentrations are used to calculate analytical accuracy as described in section 4.2.5 of this appendix.

3.4.5 Pb Performance Evaluation Program (PEP) Procedures. As stated in sections 1.1 and 2.4, PSD monitoring networks may be subject to the NPEP, which includes the Pb PEP. The PSD monitoring organizations shall consult with the PSD reviewing authority or the EPA regarding whether the implementation of Pb-PEP is required and the implementation options available for the Pb-PEP. The PEP is an independent assessment used to estimate total measurement system bias. Each year, one PE audit must be performed at one Pb site in each PSD PQAO network that has less than or equal to five sites and two audits for PSD PQAO networks with greater than five sites. In addition, each year, four collocated samples from PSD PQAO networks with less than or equal to five sites and six collocated samples from PSD PQAO networks with greater than five sites must be sent to an independent laboratory for analysis. The calculations for evaluating bias between the primary monitor and the PE monitor for Pb are described in section 4.2.4 of this appendix.

4. Calculations for Data Quality Assessments

(a) Calculations of measurement uncertainty are carried out by the PSD PQAO according to the following procedures. The PSD PQAOs should report the data for all appropriate measurement quality checks as specified in this appendix even though they may elect to perform some or all of the calculations in this section on their own.

(b) At low concentrations, agreement between the measurements of collocated samplers, expressed as relative percent difference or percent difference, may be relatively poor. For this reason, collocated measurement pairs will be selected for use in the precision and bias calculations only when both measurements are equal to or above the following limits:

(1) Pb: 0.002 µg/m3 (Methods approved after 3/04/2010, with exception of manual equivalent method EQLA-0813-803).

(2) Pb: 0.02 µg/m3 (Methods approved before 3/04/2010, and manual equivalent method EQLA-0813-803).

(3) PM10 (Hi-Vol): 15 µg/m3.

(4) PM10 (Lo-Vol): 3 µg/m3.

(5) PM2.5: 3 µg/m3.

(c) The PM2.5 3 µg/m3 limit for the PM2.5 PEP may be superseded by mutual agreement between the PSD PQAO and the PSD reviewing authority as specified in section 3.2.4 of the appendix and detailed in the approved QAPP.
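The concentration limits in paragraph (b) act as a filter on collocated pairs before any precision or bias statistic is computed. A minimal sketch of that filter follows; the dictionary keys and function name are hypothetical labels chosen for illustration, while the numeric limits are those listed above.

```python
# Minimum concentrations in µg/m3 from section 4(b); the keys are illustrative labels.
MIN_CONC = {
    "Pb_after_2010": 0.002,   # methods approved after 3/04/2010 (except EQLA-0813-803)
    "Pb_before_2010": 0.02,   # methods approved before 3/04/2010, and EQLA-0813-803
    "PM10_hivol": 15.0,
    "PM10_lovol": 3.0,
    "PM2.5": 3.0,
}


def usable_pairs(pollutant, pairs):
    """Keep only pairs where both measurements are at or above the limit."""
    limit = MIN_CONC[pollutant]
    return [(x, y) for x, y in pairs if x >= limit and y >= limit]


# Hypothetical (primary, collocated) PM2.5 concentrations in µg/m3.
pm25_pairs = [(3.0, 3.4), (2.9, 5.0), (10.2, 9.8)]
print(usable_pairs("PM2.5", pm25_pairs))
```

In the sample data the second pair is dropped because one of its two values is below 3 µg/m3.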

4.1 Statistics for the Assessment of QC Checks for SO2, NO2, O3 and CO.

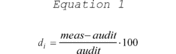

4.1.1 Percent Difference. Many of the measurement quality checks start with a comparison of an audit concentration or value (flow-rate) to the concentration/value measured by the monitor and use percent difference as the comparison statistic as described in equation 1 of this section. For each single point check, calculate the percent difference, d_i, as follows:

where meas is the concentration indicated by the PQAO's instrument and audit is the audit concentration of the standard used in the QC check being measured.
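Equation 1 appears only as an image in this copy. The sketch below assumes the usual form of a percent difference relative to the audit value, d_i = (meas - audit) / audit × 100, which is consistent with the definitions of meas and audit given above; the function name and sample values are hypothetical.

```python
def percent_difference(meas, audit):
    """Percent difference of the monitor reading relative to the audit value."""
    if audit == 0:
        raise ValueError("audit value must be non-zero")
    return (meas - audit) / audit * 100.0


# Hypothetical one-point QC check: analyzer reads 0.048 ppm against a 0.050 ppm standard.
d = percent_difference(0.048, 0.050)
print(round(d, 2))
```

A negative result indicates that the monitor read lower than the audit standard.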

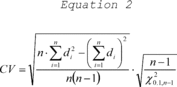

4.1.2 Precision Estimate. The precision estimate is used to assess the one-point QC checks for SO2, NO2, O3, or CO described in section 3.1.1 of this appendix. The precision estimator is the coefficient of variation upper bound and is calculated using equation 2 of this section:

where n is the number of single point checks being aggregated; χ²_{0.1,n-1} is the 10th percentile of a chi-squared distribution with n-1 degrees of freedom.
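Equation 2 is also an image here. As a hedged reconstruction, the sketch below takes the coefficient of variation upper bound to be the sample standard deviation of the percent differences multiplied by sqrt((n-1)/χ²), using the symbols defined above. The chi-squared percentile is passed in by the caller, for example from a statistical table, so that the example needs only the standard library; the function name is hypothetical.

```python
import math


def cv_upper_bound(d, chi2_10th):
    """Upper bound on the coefficient of variation of percent differences d.

    chi2_10th is the 10th percentile of a chi-squared distribution with
    len(d) - 1 degrees of freedom, supplied by the caller.
    """
    n = len(d)
    if n < 2:
        raise ValueError("at least two checks are required")
    sum_d = sum(d)
    sum_d2 = sum(x * x for x in d)
    # Sample variance written in the sum-of-squares form.
    variance = max(0.0, (n * sum_d2 - sum_d ** 2) / (n * (n - 1)))
    return math.sqrt(variance) * math.sqrt((n - 1) / chi2_10th)


# For 2 degrees of freedom the percentile has a closed form: -2 * ln(1 - 0.1).
chi2_df2 = -2.0 * math.log(0.9)
print(cv_upper_bound([-2.0, 1.0, 4.0], chi2_df2))
```

With three checks the sample standard deviation in this example is 3, and the chi-squared factor inflates it to reflect the small sample size.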

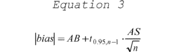

4.1.3 Bias Estimate. The bias estimate is calculated using the one-point QC checks for SO2, NO2, O3, or CO described in section 3.1.1 of this appendix. The bias estimator is an upper bound on the mean absolute value of the percent differences as described in equation 3 of this section:

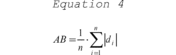

where n is the number of single point checks being aggregated; t_{0.95,n-1} is the 95th quantile of a t-distribution with n-1 degrees of freedom; the quantity AB is the mean of the absolute values of the d_i's and is calculated using equation 4 of this section:

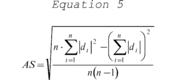

and the quantity AS is the standard deviation of the absolute value of the d_i's and is calculated using equation 5 of this section:

4.1.3.1 Assigning a sign (positive/negative) to the bias estimate. Since the bias statistic as calculated in equation 3 of this appendix uses absolute values, it does not have a tendency (negative or positive bias) associated with it. A sign will be designated by rank ordering the percent differences of the QC check samples from a given site for a particular assessment interval.

4.1.3.2 Calculate the 25th and 75th percentiles of the percent differences for each site. The absolute bias upper bound should be flagged as positive if both percentiles are positive and negative if both percentiles are negative. The absolute bias upper bound would not be flagged if the 25th and 75th percentiles are of different signs.
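The bias upper bound and its sign can be sketched together. Equations 3 through 5 are images in this copy, so the code assumes the form AB + t × AS / sqrt(n), with AB and AS as defined in the text. The t quantile is supplied by the caller. The percentile method (`statistics.quantiles` with `method="inclusive"`) is an assumption, since the text does not name one, and the function names are hypothetical.

```python
import math
import statistics


def bias_upper_bound(d, t_95):
    """Upper bound on the mean absolute percent difference.

    t_95 is the 95th quantile of a t-distribution with len(d) - 1
    degrees of freedom, supplied by the caller.
    """
    n = len(d)
    abs_d = [abs(x) for x in d]
    ab = sum(abs_d) / n               # mean of |d_i|
    as_ = statistics.stdev(abs_d)     # sample standard deviation of |d_i|
    return ab + t_95 * as_ / math.sqrt(n)


def bias_sign(d):
    """Return '+', '-', or '' from the 25th and 75th percentiles of d."""
    q25, _, q75 = statistics.quantiles(d, n=4, method="inclusive")
    if q25 > 0 and q75 > 0:
        return "+"
    if q25 < 0 and q75 < 0:
        return "-"
    return ""


d = [-2.0, 1.0, 4.0]
# 2.920 is the tabulated 95th quantile of a t-distribution with 2 degrees of freedom.
print(bias_upper_bound(d, 2.920), repr(bias_sign(d)))
```

In this example the quartiles straddle zero, so the bound is reported without a sign.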

4.2 Statistics for the Assessment of PM10, PM2.5, and Pb.

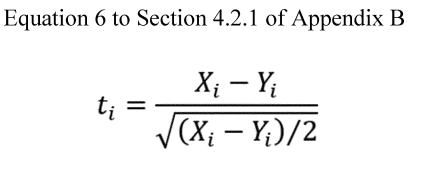

4.2.1 Collocated Quality Control Sampler Precision Estimate for PM10, PM2.5, and Pb. Precision is estimated via duplicate measurements from collocated samplers. It is recommended that the precision be aggregated at the PQAO level quarterly, annually, and at the 3-year level. The data pair would only be considered valid if both concentrations are greater than or equal to the minimum values specified in section 4(c) of this appendix. For each collocated data pair, calculate t_i, using equation 6 to this appendix:

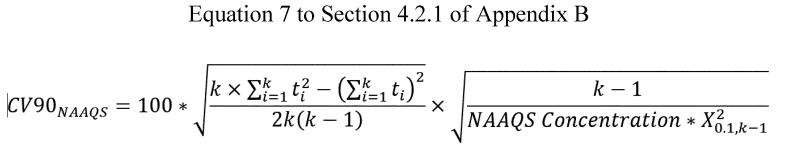

Where X_i is the concentration from the primary sampler and Y_i is the concentration value from the audit sampler. The coefficient of variation upper bound is calculated using equation 7 to this appendix:

Where k is the number of valid data pairs being aggregated, and χ²_{0.1,k-1} is the 10th percentile of a chi-squared distribution with k-1 degrees of freedom. The factor of 2 in the denominator adjusts for the fact that each t_i is calculated from two values with error.
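Equations 6 and 7 are images in this copy. The sketch below assumes that t_i is the relative percent difference, (X_i - Y_i) divided by the pair mean and multiplied by 100, and that equation 7 has the same form as the gaseous precision estimate with an extra factor of 2 in the denominator, as the text above describes. The function names and sample data are hypothetical, and the chi-squared percentile is supplied by the caller.

```python
import math


def relative_percent_difference(x, y):
    """Difference between primary (x) and collocated (y) values, relative to their mean."""
    return (x - y) / ((x + y) / 2.0) * 100.0


def collocated_cv_upper_bound(pairs, chi2_10th):
    """CV upper bound for collocated pairs.

    chi2_10th is the 10th percentile of a chi-squared distribution with
    len(pairs) - 1 degrees of freedom, supplied by the caller.
    """
    t = [relative_percent_difference(x, y) for x, y in pairs]
    k = len(t)
    if k < 2:
        raise ValueError("at least two valid pairs are required")
    sum_t = sum(t)
    sum_t2 = sum(v * v for v in t)
    # The factor of 2 accounts for error in both members of each pair.
    variance = max(0.0, (k * sum_t2 - sum_t ** 2) / (2 * k * (k - 1)))
    return math.sqrt(variance) * math.sqrt((k - 1) / chi2_10th)


# Hypothetical valid pairs in µg/m3; closed-form percentile for 2 degrees of freedom.
pairs = [(10.0, 10.0), (12.0, 8.0), (9.0, 11.0)]
chi2_df2 = -2.0 * math.log(0.9)
print(collocated_cv_upper_bound(pairs, chi2_df2))
```

Pairs should be screened against the minimum concentrations in section 4(b) before being passed to a function like this one.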

4.2.2 One-Point Flow Rate Verification Bias Estimate for PM10, PM2.5 and Pb. For each one-point flow rate verification, calculate the percent difference in volume using equation 1 of this appendix where meas is the value indicated by the sampler's volume measurement and audit is the actual volume indicated by the auditing flow meter. The absolute volume bias upper bound is then calculated using equation 3, where n is the number of flow rate audits being aggregated; t_{0.95,n-1} is the 95th quantile of a t-distribution with n-1 degrees of freedom, the quantity AB is the mean of the absolute values of the d_i's and is calculated using equation 4 of this appendix, and the quantity AS in equation 3 of this appendix is the standard deviation of the absolute values of the d_i's and is calculated using equation 5 of this appendix.

4.2.3 Semi-Annual Flow Rate Audit Bias Estimate for PM10, PM2.5 and Pb. Use the same procedure described in section 4.2.2 for the evaluation of flow rate audits.

4.2.4 Performance Evaluation Programs Bias Estimate for Pb. The Pb bias estimate is calculated using the paired routine and the PEP monitor as described in section 3.4.5. Use the same procedures as described in section 4.1.3 of this appendix.

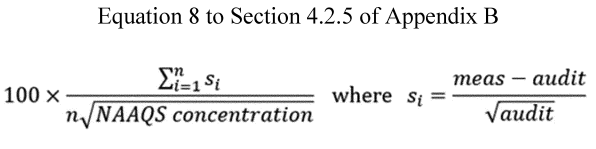

4.2.5 Performance Evaluation Programs Bias Estimate for PM2.5. The bias estimate is calculated using the PEP audits described in section 3.2.4 of this appendix. The bias estimator is based on s_i, the absolute difference in concentrations divided by the square root of the PEP concentration.
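The statistic s_i can be illustrated with a short function. The equation itself is an image in this copy, so this sketch rests on an assumption: "absolute difference" is read as the difference in concentration units (as opposed to a percent difference), keeping its sign, with meas taken from the primary monitor and audit from the PEP sampler. The function name and sample values are hypothetical.

```python
import math


def pep_scaled_difference(meas, audit):
    """Difference in concentration (µg/m3) divided by the square root of the PEP value."""
    if audit <= 0:
        raise ValueError("PEP concentration must be positive")
    return (meas - audit) / math.sqrt(audit)


# Hypothetical pair: primary monitor 9.5 µg/m3, PEP sampler 9.0 µg/m3.
print(pep_scaled_difference(9.5, 9.0))
```

Dividing by the square root of the PEP concentration keeps small differences at low concentrations from dominating the estimate the way a percent difference would.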

4.2.6 Pb Analysis Audit Bias Estimate. The bias estimate is calculated using the analysis audit data described in section 3.4.4. Use the same bias estimate procedure as described in section 4.1.3 of this appendix.

5. Reporting Requirements

5.1. Quarterly Reports. For each quarter, each PSD PQAO shall report to the PSD reviewing authority (and AQS if required by the PSD reviewing authority) the results of all valid measurement quality checks it has carried out during the quarter. The quarterly reports must be submitted consistent with the data reporting requirements specified for air quality data as set forth in 40 CFR 58.16 that pertain to PSD monitoring.

6. References

(1) American National Standard Institute. Quality Management Systems For Environmental Information And Technology Programs: Requirements With Guidance For Use. ASQ/ANSI E4-2014. February 2014. Available from ANSI Webstore https://webstore.ansi.org/.

(2) EPA Requirements for Quality Management Plans. EPA QA/R-2. EPA/240/B-01/002. March 2001, Reissue May 2006. Office of Environmental Information, Washington, DC 20460. http://www.epa.gov/quality/agency-wide-quality-system-documents.

(3) EPA Requirements for Quality Assurance Project Plans for Environmental Data Operations. EPA QA/R-5. EPA/240/B-01/003. March 2001, Reissue May 2006. Office of Environmental Information, Washington, DC 20460. http://www.epa.gov/quality/agency-wide-quality-system-documents.

(4) EPA Traceability Protocol for Assay and Certification of Gaseous Calibration Standards. EPA-600/R-12/531. May, 2012. Available from U.S. Environmental Protection Agency, National Risk Management Research Laboratory, Research Triangle Park NC 27711. https://www.epa.gov/nscep.

(5) Guidance for the Data Quality Objectives Process. EPA QA/G-4. EPA/240/B-06/001. February, 2006. Office of Environmental Information, Washington, DC 20460. http://www.epa.gov/quality/agency-wide-quality-system-documents.

(6) List of Designated Reference and Equivalent Methods. Available from U.S. Environmental Protection Agency, Center for Environmental Measurements and Modeling, Air Methods and Characterization Division, MD-D205-03, Research Triangle Park, NC 27711. https://www.epa.gov/amtic/air-monitoring-methods-criteria-pollutants.

(7) Transfer Standards for the Calibration of Ambient Air Monitoring Analyzers for Ozone. EPA-454/B-13-004. U.S. Environmental Protection Agency, Research Triangle Park, NC 27711, October, 2013. https://www.epa.gov/sites/default/files/2020-09/documents/ozonetransferstandardguidance.pdf.

(8) Paur, R.J. and F.F. McElroy. Technical Assistance Document for the Calibration of Ambient Ozone Monitors. EPA-600/4-79-057. U.S. Environmental Protection Agency, Research Triangle Park, NC 27711, September, 1979. http://www.epa.gov/ttn/amtic/cpreldoc.html.

(9) Quality Assurance Handbook for Air Pollution Measurement Systems, Volume 1: A Field Guide to Environmental Quality Assurance. EPA-600/R-94/038a. April 1994. Available from U.S. Environmental Protection Agency, ORD Publications Office, Center for Environmental Research Information (CERI), 26 W. Martin Luther King Drive, Cincinnati, OH 45268. https://www.epa.gov/amtic/ambient-air-monitoring-quality-assurance#documents.

(10) Quality Assurance Handbook for Air Pollution Measurement Systems, Volume II: Ambient Air Quality Monitoring Program Quality System Development. EPA-454/B-13-003. https://www.epa.gov/amtic/ambient-air-monitoring-quality-assurance#documents.

(11) National Performance Evaluation Program Standard Operating Procedures. https://www.epa.gov/amtic/ambient-air-monitoring-quality-assurance#npep.

| Method | Assessment method | Coverage | Minimum frequency | Parameters reported | AQS Assessment type |
|---|---|---|---|---|---|
| Gaseous Methods (CO, NO2, SO2, O3): | | | | | |
| One-Point QC for SO2, NO2, O3, CO | Response check at concentration 0.005-0.08 ppm SO2, NO2, O3, & 0.5 and 5 ppm CO | Each analyzer | Once per 2 weeks⁵ | Audit concentration¹ and measured concentration² | One-Point QC. |
| Quarterly performance evaluation for SO2, NO2, O3, CO | See section 3.1.2 of this appendix | Each analyzer | Once per quarter⁵ | Audit concentration¹ and measured concentration² for each level | Annual PE. |
| NPAP for SO2, NO2, O3, CO³ | Independent Audit | Each primary monitor | Once per year | Audit concentration¹ and measured concentration² for each level | NPAP. |
| Particulate Methods: | | | | | |
| Collocated sampling PM10, PM2.5, Pb | Collocated samplers | 1 per PSD Network per pollutant | Every 6 days or every 3 days if daily monitoring required | Primary sampler concentration and duplicate sampler concentration⁴ | No Transaction reported as raw data. |
| Flow rate verification PM10, PM2.5, Pb | Check of sampler flow rate | Each sampler | Once every month⁵ | Audit flow rate and measured flow rate indicated by the sampler | Flow Rate Verification. |
| Semi-annual flow rate audit PM10, PM2.5, Pb | Check of sampler flow rate using independent standard | Each sampler | Once every 6 months or beginning, middle and end of monitoring⁵ | Audit flow rate and measured flow rate indicated by the sampler | Semi Annual Flow Rate Audit. |
| Pb analysis audits Pb-TSP, Pb-PM10 | Check of analytical system with Pb audit strips/filters | Analytical | Each quarter⁵ | Measured value and audit value (µg Pb/filter) using AQS unit code 077 for parameters: 14129-Pb (TSP) LC FRM/FEM; 85129-Pb (TSP) LC Non-FRM/FEM. | Pb Analysis Audits. |
| Performance Evaluation Program PM2.5³ | Collocated samplers | (1) 5 valid audits for PQAOs with <= 5 sites. (2) 8 valid audits for PQAOs with > 5 sites. (3) All samplers in 6 years | Over all 4 quarters⁵ | Primary sampler concentration and performance evaluation sampler concentration | PEP. |
| Performance Evaluation Program Pb³ | Collocated samplers | (1) 1 valid audit and 4 collocated samples for PQAOs with <= 5 sites. (2) 2 valid audits and 6 collocated samples for PQAOs with > 5 sites. | Over all 4 quarters⁵ | Primary sampler concentration and performance evaluation sampler concentration. Primary sampler concentration and duplicate sampler concentration | PEP. |

¹ Effective concentration for open path analyzers.

² Corrected concentration, if applicable for open path analyzers.

³ NPAP, PM2.5 PEP, and Pb-PEP must be implemented if data is used for NAAQS decisions; otherwise implementation is at PSD reviewing authority discretion.

⁴ Both primary and collocated sampler values are reported as raw data.

⁵ A maximum number of days should be between these checks to ensure the checks are routinely conducted over time and to limit data impacts resulting from a failed check.

[81 FR 17290, Mar. 28, 2016; 89 FR 16392, March 6, 2024]

