Air Programs

Air Quality

05/13/2025

...

Authority: 42 U.S.C. 7403, 7405, 7410, 7414, 7601, 7611, 7614, and 7619.

Subpart A - General Provisions

§58.1 Definitions.

As used in this part, all terms not defined herein have the meaning given them in the Clean Air Act.

AADT means the annual average daily traffic.

Act means the Clean Air Act as amended (42 U.S.C. 7401 et seq.).

Additive and multiplicative bias means the linear regression intercept and slope of a linear plot fitted to corresponding candidate and reference method mean measurement data pairs.
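The regression in this definition can be illustrated with a short Python sketch. It assumes ordinary least squares, with the reference-method means as x and the candidate-method means as y; the function name `additive_multiplicative_bias` is hypothetical, and any fitting procedure specified elsewhere in this chapter governs over this illustration.

```python
from statistics import mean


def additive_multiplicative_bias(reference, candidate):
    """Return (additive_bias, multiplicative_bias) as (intercept, slope).

    Assumes paired mean concentrations in the same units and order, with the
    reference method on the x-axis and the candidate method on the y-axis,
    fitted by ordinary least squares.
    """
    if len(reference) != len(candidate) or len(reference) < 2:
        raise ValueError("need at least two paired observations")
    x_bar = mean(reference)
    y_bar = mean(candidate)
    sxx = sum((x - x_bar) ** 2 for x in reference)
    if sxx == 0:
        raise ValueError("reference values must not all be equal")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(reference, candidate))
    slope = sxy / sxx                  # multiplicative bias
    intercept = y_bar - slope * x_bar  # additive bias
    return intercept, slope
```

A candidate that reads exactly twice the reference plus one unit, for example, yields an additive bias of 1 and a multiplicative bias of 2.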

Administrator means the Administrator of the Environmental Protection Agency (EPA) or his or her authorized representative.

Air quality system (AQS) means the EPA's computerized system for storing and reporting of information relating to ambient air quality data.

Approved regional method (ARM) means a continuous PM2.5 method that has been approved specifically within a state or local air monitoring network for purposes of comparison to the NAAQS and to meet other monitoring objectives.

AQCR means air quality control region.

Area-wide means all monitors sited at neighborhood, urban, and regional scales, as well as those monitors sited at either micro- or middle-scale that are representative of many such locations in the same CBSA.

Certifying agency means a state, local, or tribal agency responsible for meeting the data certification requirements in accordance with §58.15 for a unique set of monitors.

Chemical Speciation Network (CSN) includes Speciation Trends Network stations (STN) as specified in paragraph 4.7.4 of appendix D of this part and supplemental speciation stations that provide chemical species data of fine particulate.

CO means carbon monoxide.

Combined statistical area (CSA) is defined by the U.S. Office of Management and Budget as a geographical area consisting of two or more adjacent Core Based Statistical Areas (CBSA) with employment interchange of at least 15 percent. Combination is automatic if the employment interchange is 25 percent or more and is determined by local opinion if it is more than 15 but less than 25 percent.
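The combination thresholds can be expressed as a small decision function. This is an illustrative sketch only: the name `csa_combination` is hypothetical, and placing an interchange of exactly 15 percent in the local-opinion band is an assumption, because the definition admits "at least 15 percent" but describes the local-opinion band as "more than 15" percent.

```python
def csa_combination(employment_interchange_pct: float) -> str:
    """Classify whether two adjacent CBSAs combine into a CSA.

    Assumption: exactly 15 percent is treated as the local-opinion band;
    25 percent or more is treated as automatic combination.
    """
    if employment_interchange_pct >= 25:
        return "automatic"
    if employment_interchange_pct >= 15:
        return "local opinion"
    return "not combined"
```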

Core-based statistical area (CBSA) is defined by the U.S. Office of Management and Budget as a statistical geographic entity consisting of the county or counties associated with at least one urbanized area/urban cluster of at least 10,000 population, plus adjacent counties having a high degree of social and economic integration. Metropolitan Statistical Areas (MSAs) and micropolitan statistical areas are the two categories of CBSA (metropolitan areas have populations greater than 50,000, and micropolitan areas have populations between 10,000 and 50,000). In the case of very large cities where two or more CBSAs are combined, these larger areas are referred to as combined statistical areas (CSAs).

Corrected concentration pertains to the result of an accuracy or precision assessment test of an open path analyzer in which a high-concentration test or audit standard gas contained in a short test cell is inserted into the optical measurement beam of the instrument. When the pollutant concentration measured by the analyzer in such a test includes both the pollutant concentration in the test cell and the concentration in the atmosphere, the atmospheric pollutant concentration must be subtracted from the test measurement to obtain the corrected concentration test result. The corrected concentration is equal to the measured concentration minus the average of the atmospheric pollutant concentrations measured (without the test cell) immediately before and immediately after the test.
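The corrected-concentration arithmetic can be sketched as follows. The function and parameter names are hypothetical; the sketch assumes all three readings are in the same units and simply applies the subtraction stated in the definition.

```python
def corrected_concentration(measured_with_cell: float,
                            ambient_before: float,
                            ambient_after: float) -> float:
    """Measured value (test cell in beam) minus the mean of the atmospheric
    readings taken, without the test cell, immediately before and after."""
    ambient_average = (ambient_before + ambient_after) / 2
    return measured_with_cell - ambient_average
```

For example, a test reading of 450 ppb with ambient readings of 30 ppb before and 50 ppb after gives a corrected concentration of 410 ppb.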

Design value means the calculated concentration according to the applicable appendix of part 50 of this chapter for the highest site in an attainment or nonattainment area.

EDO means environmental data operations.

Effective concentration pertains to testing an open path analyzer with a high-concentration calibration or audit standard gas contained in a short test cell inserted into the optical measurement beam of the instrument. Effective concentration is the equivalent ambient-level concentration that would produce the same spectral absorbance over the actual atmospheric monitoring path length as produced by the high-concentration gas in the short test cell. Quantitatively, effective concentration is equal to the actual concentration of the gas standard in the test cell multiplied by the ratio of the path length of the test cell to the actual atmospheric monitoring path length.
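The quantitative rule in this definition reduces to a single ratio. This minimal sketch uses hypothetical names and assumes both path lengths share the same units, so the ratio is dimensionless and the result carries the units of the gas standard.

```python
def effective_concentration(cell_concentration: float,
                            cell_path_length: float,
                            monitoring_path_length: float) -> float:
    """Gas-standard concentration scaled by (test cell length / monitoring path length)."""
    if cell_path_length <= 0 or monitoring_path_length <= 0:
        raise ValueError("path lengths must be positive")
    return cell_concentration * (cell_path_length / monitoring_path_length)
```

For instance, a 100 ppm standard in a 0.1 m cell on a 200 m monitoring path corresponds to an effective concentration of 0.05 ppm.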

Federal equivalent method (FEM) means a method for measuring the concentration of an air pollutant in the ambient air that has been designated as an equivalent method in accordance with part 53 of this chapter; it does not include a method for which an equivalent method designation has been canceled in accordance with §53.11 or §53.16.

Federal reference method (FRM) means a method of sampling and analyzing the ambient air for an air pollutant that is specified as a reference method in an appendix to part 50 of this chapter, or a method that has been designated as a reference method in accordance with this part; it does not include a method for which a reference method designation has been canceled in accordance with §53.11 or §53.16 of this chapter.

HNO3 means nitric acid.

Implementation plan means an implementation plan approved or promulgated by the EPA pursuant to section 110 of the Act.

Local agency means any local government agency, other than the state agency, which is charged by a state with the responsibility for carrying out a portion of the annual monitoring network plan required by §58.10.

Meteorological measurements means measurements of wind speed, wind direction, barometric pressure, temperature, relative humidity, solar radiation, ultraviolet radiation, and/or precipitation that occur at SLAMS stations including the NCore and PAMS networks.

Metropolitan Statistical Area (MSA) means a CBSA associated with at least one urbanized area of 50,000 population or greater. The central county, plus adjacent counties with a high degree of integration, comprise the area.

Monitor means an instrument, sampler, analyzer, or other device that measures or assists in the measurement of atmospheric air pollutants and which is acceptable for use in ambient air surveillance under the applicable provisions of appendix C to this part.

Monitoring agency means a state, local or tribal agency responsible for meeting the requirements of this part.

Monitoring organization means a monitoring agency responsible for operating a monitoring site for which the quality assurance regulations apply.

Monitoring path for an open path analyzer means the actual path in space between two geographical locations over which the pollutant concentration is measured and averaged.

Monitoring path length of an open path analyzer means the length of the monitoring path in the atmosphere over which the average pollutant concentration measurement (path-averaged concentration) is determined. See also, optical measurement path length.

Monitoring planning area (MPA) means a contiguous geographic area with established, well-defined boundaries, such as a CBSA, county or state, having a common area that is used for planning monitoring locations for PM2.5. An MPA may cross state boundaries, such as the Philadelphia PA-NJ MSA, and be further subdivided into community monitoring zones. The MPAs are generally oriented toward CBSAs or CSAs with populations greater than 200,000, but for convenience, those portions of a state that are not associated with CBSAs can be considered as a single MPA.

NATTS means the national air toxics trends stations. This network provides hazardous air pollution ambient data.

NCore means the National Core multipollutant monitoring stations. Monitors at these sites are required to measure particles (PM2.5, speciated PM2.5, PM10-2.5), O3, SO2, CO, nitrogen oxides (NO/NOy), and meteorology (wind speed, wind direction, temperature, relative humidity).

Near-road monitor means any approved monitor meeting the applicable specifications described in 40 CFR part 58, appendix D (sections 4.2.1, 4.3.2, 4.7.1(b)(2)) and appendix E (section 6.4(a), Table E-4) for near-road measurement of PM2.5, CO, or NO2.

Network means all stations of a given type or types.

Network Plan means the Annual Monitoring Network Plan described in §58.10.

NH3 means ammonia.

NO2 means nitrogen dioxide.

NO means nitric oxide.

NOX means the sum of the concentrations of NO2 and NO.

NOy means total reactive nitrogen oxides: the sum of NO, NO2, and other nitrogen oxides referred to as NOz.

O3 means ozone.

Open path analyzer means an automated analytical method that measures the average atmospheric pollutant concentration in situ along one or more monitoring paths having a monitoring path length of 5 meters or more and that has been designated as a reference or equivalent method under the provisions of part 53 of this chapter.

Optical measurement path length means the actual length of the optical beam over which measurement of the pollutant is determined. The path-integrated pollutant concentration measured by the analyzer is divided by the optical measurement path length to determine the path-averaged concentration. Generally, the optical measurement path length is:

(1) Equal to the monitoring path length for a (bistatic) system having a transmitter and a receiver at opposite ends of the monitoring path;

(2) Equal to twice the monitoring path length for a (monostatic) system having a transmitter and receiver at one end of the monitoring path and a mirror or retroreflector at the other end; or

(3) Equal to some multiple of the monitoring path length for more complex systems having multiple passes of the measurement beam through the monitoring path.
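The three general cases above, and the division that yields the path-averaged concentration, can be sketched in Python. The enum, the function names, and the `passes` parameter (the number of times the beam traverses the monitoring path in a multipass system) are hypothetical; because the definition says "generally," real instruments may differ, and the sketch assumes a path-integrated reading in units such as ppm·m.

```python
from enum import Enum
from typing import Optional


class OpenPathGeometry(Enum):
    BISTATIC = "bistatic"      # transmitter and receiver at opposite ends
    MONOSTATIC = "monostatic"  # mirror or retroreflector at the far end
    MULTIPASS = "multipass"    # several beam passes through the path


def optical_path_length(monitoring_path_length: float,
                        geometry: OpenPathGeometry,
                        passes: Optional[int] = None) -> float:
    """Return the optical measurement path length (same units as input)."""
    if monitoring_path_length <= 0:
        raise ValueError("monitoring path length must be positive")
    if geometry is OpenPathGeometry.BISTATIC:
        return monitoring_path_length        # case (1): one pass
    if geometry is OpenPathGeometry.MONOSTATIC:
        return 2 * monitoring_path_length    # case (2): out and back
    # Case (3): hypothetical pass count supplied by the caller.
    if passes is None or passes < 1:
        raise ValueError("multipass geometry needs a positive pass count")
    return passes * monitoring_path_length


def path_averaged_concentration(path_integrated: float,
                                optical_length: float) -> float:
    """Divide the path-integrated reading by the optical path length."""
    return path_integrated / optical_length
```

With a 150 m monostatic path, the optical length is 300 m, so a path-integrated reading of 30 ppm·m averages to 0.1 ppm.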

PAMS means photochemical assessment monitoring stations.

Pb means lead.

PM means particulate matter, including but not limited to PM10, PM10C, PM2.5, and PM10-2.5.

PM2.5 means particulate matter with an aerodynamic diameter less than or equal to a nominal 2.5 micrometers as measured by a reference method based on appendix L of part 50 and designated in accordance with part 53 of this chapter, by an equivalent method designated in accordance with part 53, or by an approved regional method designated in accordance with appendix C to this part.

PM10 means particulate matter with an aerodynamic diameter less than or equal to a nominal 10 micrometers as measured by a reference method based on appendix J of part 50 of this chapter and designated in accordance with part 53 of this chapter or by an equivalent method designated in accordance with part 53.

PM10C means particulate matter with an aerodynamic diameter less than or equal to a nominal 10 micrometers as measured by a reference method based on appendix O of part 50 of this chapter and designated in accordance with part 53 of this chapter or by an equivalent method designated in accordance with part 53.

PM10-2.5 means particulate matter with an aerodynamic diameter less than or equal to a nominal 10 micrometers and greater than a nominal 2.5 micrometers as measured by a reference method based on appendix O to part 50 of this chapter and designated in accordance with part 53 of this chapter or by an equivalent method designated in accordance with part 53.
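The size ranges in the PM definitions above can be summarized by a classifier over aerodynamic diameter. This is an idealized sketch with a hypothetical function name: it treats the nominal cut points as sharp cutoffs, whereas real samplers have gradual penetration curves, and it says nothing about which reference or equivalent method measures each fraction.

```python
def size_fractions(aerodynamic_diameter_um: float) -> set:
    """Return the PM fraction labels whose nominal size range includes a
    particle of the given aerodynamic diameter (micrometers).

    PM10C covers the same nominal size range as PM10; the definitions
    differ in the reference method (appendix O versus appendix J of part 50).
    """
    if aerodynamic_diameter_um < 0:
        raise ValueError("diameter must be non-negative")
    fractions = set()
    if aerodynamic_diameter_um <= 2.5:
        fractions.add("PM2.5")
    if aerodynamic_diameter_um <= 10:
        fractions.update({"PM10", "PM10C"})
        if aerodynamic_diameter_um > 2.5:
            fractions.add("PM10-2.5")
    return fractions
```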

Point analyzer means an automated analytical method that measures pollutant concentration in an ambient air sample extracted from the atmosphere at a specific inlet probe point, and that has been designated as a reference or equivalent method in accordance with part 53 of this chapter.

Primary monitor means the monitor identified by the monitoring organization that provides concentration data used for comparison to the NAAQS. For any specific site, only one monitor for each pollutant can be designated in AQS as primary monitor for a given period of time. The primary monitor identifies the default data source for creating a combined site record for purposes of NAAQS comparisons.

Primary quality assurance organization (PQAO) means a monitoring organization, a group of monitoring organizations or other organization that is responsible for a set of stations that monitor the same pollutant and for which data quality assessments can be pooled. Each criteria pollutant sampler/monitor at a monitoring station must be associated with only one PQAO.

Probe means the actual inlet where an air sample is extracted from the atmosphere for delivery to a sampler or point analyzer for pollutant analysis.

PSD monitoring network means a set of stations that provide concentration information for a specific PSD permit.

PSD monitoring organization means a source owner/operator, a government agency, or a contractor of the source or agency that operates an ambient air pollution monitoring network for PSD purposes.

PSD reviewing authority means the state air pollution control agency, local agency, other state agency, tribe, or other agency authorized by the Administrator to carry out a permit program under §§51.165 and 51.166 of this chapter, or the Administrator in the case of EPA-implemented permit programs under §52.21 of this chapter.

PSD station means any station operated for the purpose of establishing the effect on air quality of the emissions from a proposed source for purposes of prevention of significant deterioration as required by §51.24(n) of this chapter.

Regional Administrator means the Administrator of one of the ten EPA Regional Offices or his or her authorized representative.

Reporting organization means an entity, such as a state, local, or tribal monitoring agency, that reports air quality data to the EPA.

Site means a geographic location. One or more stations may be at the same site.

SLAMS means state or local air monitoring stations. The SLAMS include the ambient air quality monitoring sites and monitors that are required by appendix D of this part and are needed for the monitoring objectives of appendix D, including NAAQS comparisons, but may serve other data purposes. The SLAMS include NCore, PAMS, CSN, and all other state or locally operated criteria pollutant monitors, operated in accordance with this part, that have not been designated and approved by the Regional Administrator as SPM stations in an annual monitoring network plan.

SO2 means sulfur dioxide.

Special purpose monitor (SPM) station means a monitor included in an agency's monitoring network that the agency has designated as a special purpose monitor station in its annual monitoring network plan and in the AQS, and which the agency does not count when showing compliance with the minimum requirements of this subpart for the number and siting of monitors of various types. Any SPM operated by an air monitoring agency must be included in the periodic assessments and annual monitoring network plan required by §58.10 and approved by the Regional Administrator.

State agency means the air pollution control agency primarily responsible for development and implementation of a State Implementation Plan under the Act.

Station means a single monitor, or a group of monitors, located at a particular site.

STN station means a PM2.5 chemical speciation station designated to be part of the speciation trends network. This network provides chemical species data of fine particulate.

Supplemental speciation station means a PM2.5 chemical speciation station that is operated for monitoring agency needs and not part of the STN.

TSP (total suspended particulates) means particulate matter as measured by the method described in appendix B to part 50 of this chapter.

Urbanized area means an area with a minimum residential population of at least 50,000 people and which generally includes core census block groups or blocks that have a population density of at least 1,000 people per square mile and surrounding census blocks that have an overall density of at least 500 people per square mile. The Census Bureau notes that under certain conditions, less densely settled territory may be part of each urbanized area.

VOCs means volatile organic compounds.

[81 FR 17276, Mar. 28, 2016; 89 FR 16388, March 6, 2024]

§58.2 Purpose.

(a) This part contains requirements for measuring ambient air quality and for reporting ambient air quality data and related information. The monitoring criteria pertain to the following areas:

(1) Quality assurance procedures for monitor operation and data handling.

(2) Methodology used in monitoring stations.

(3) Operating schedule.

(4) Siting parameters for instruments or instrument probes.

(5) Minimum ambient air quality monitoring network requirements used to provide support to the State implementation plans (SIP), national air quality assessments, and policy decisions. These minimums are described as part of the network design requirements, including minimum numbers and placement of monitors of each type.

(6) Air quality data reporting, and requirements for the daily reporting of an index of ambient air quality.

(b) The requirements pertaining to provisions for an air quality surveillance system in the SIP are contained in this part.

(c) This part also acts to establish a national ambient air quality monitoring network for the purpose of providing timely air quality data upon which to base national assessments and policy decisions.

§58.3 Applicability.

This part applies to:

(a) State air pollution control agencies.

(b) Any local air pollution control agency to which the State has delegated authority to operate a portion of the State's SLAMS network.

(c) Owners or operators of proposed sources.

Subpart B - Monitoring Network

§58.10 Annual monitoring network plan and periodic network assessment.

(a)(1) Beginning July 1, 2007, the State, or where applicable local, agency shall submit to the Regional Administrator an annual monitoring network plan which shall provide for the documentation of the establishment and maintenance of an air quality surveillance system that consists of a network of SLAMS monitoring stations that can include FRM and FEM monitors that are part of SLAMS, NCore, CSN, PAMS, and SPM stations. The plan shall include a statement of whether the operation of each monitor meets the requirements of appendices A, B, C, D, and E to this part, where applicable. The Regional Administrator may require additional information in support of this statement. The annual monitoring network plan must be made available for public inspection and comment for at least 30 days prior to submission to the EPA and the submitted plan shall include and address, as appropriate, any received comments.

(2) Any annual monitoring network plan that proposes network modifications (including new or discontinued monitoring sites, new determinations that data are not of sufficient quality to be compared to the NAAQS, and changes in identification of monitors as suitable or not suitable for comparison against the annual PM2.5 NAAQS) to SLAMS networks is subject to the approval of the EPA Regional Administrator, who shall approve or disapprove the plan within 120 days of submission of a complete plan to the EPA.

(3) The plan for establishing required NCore multipollutant stations shall be submitted to the Administrator not later than July 1, 2009. The plan shall provide for all required stations to be operational by January 1, 2011.

(4) A plan for establishing source-oriented Pb monitoring sites in accordance with the requirements of appendix D to this part for Pb sources emitting 1.0 tpy or greater shall be submitted to the EPA Regional Administrator no later than July 1, 2009, as part of the annual network plan required in paragraph (a)(1) of this section. The plan shall provide for the required source-oriented Pb monitoring sites for Pb sources emitting 1.0 tpy or greater to be operational by January 1, 2010. A plan for establishing source-oriented Pb monitoring sites in accordance with the requirements of appendix D to this part for Pb sources emitting equal to or greater than 0.50 tpy but less than 1.0 tpy shall be submitted to the EPA Regional Administrator no later than July 1, 2011. The plan shall provide for the required source-oriented Pb monitoring sites for Pb sources emitting equal to or greater than 0.50 tpy but less than 1.0 tpy to be operational by December 27, 2011.
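The two emission tiers and their dates in this paragraph can be tabulated in code. This sketch encodes only paragraph (a)(4); the function name is hypothetical, and appendix D to this part remains the authority on which Pb sources actually require monitoring.

```python
from datetime import date
from typing import Optional, Tuple


def pb_source_deadlines(emissions_tpy: float) -> Optional[Tuple[date, date]]:
    """Return (plan_due, operational_by) for a source-oriented Pb site,
    or None for sources below 0.50 tpy (not covered by this paragraph)."""
    if emissions_tpy >= 1.0:
        return date(2009, 7, 1), date(2010, 1, 1)
    if emissions_tpy >= 0.50:
        return date(2011, 7, 1), date(2011, 12, 27)
    return None
```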

(5)(i) A plan for establishing or identifying an area-wide NO2 monitor, in accordance with the requirements of Appendix D, section 4.3.3 to this part, shall be submitted as part of the Annual Monitoring Network Plan to the EPA Regional Administrator by July 1, 2012. The plan shall provide for these required monitors to be operational by January 1, 2013.

(ii) A plan for establishing or identifying any NO2 monitor intended to characterize vulnerable and susceptible populations, as required in Appendix D, section 4.3.4 to this part, shall be submitted as part of the Annual Monitoring Network Plan to the EPA Regional Administrator by July 1, 2012. The plan shall provide for these required monitors to be operational by January 1, 2013.

(iii) A plan for establishing a single near-road NO2 monitor in CBSAs having 1,000,000 or more persons, in accordance with the requirements of Appendix D, section 4.3.2 to this part, shall be submitted as part of the Annual Monitoring Network Plan to the EPA Regional Administrator by July 1, 2013. The plan shall provide for these required monitors to be operational by January 1, 2014.

(iv) A plan for establishing a second near-road NO2 monitor in any CBSA with a population of 2,500,000 persons or more, or a second monitor in any CBSA with a population of 1,000,000 or more persons that has one or more roadway segments with 250,000 or greater AADT counts, in accordance with the requirements of appendix D, section 4.3.2 to this part, shall be submitted as part of the Annual Monitoring Network Plan to the EPA Regional Administrator by July 1, 2014. The plan shall provide for these required monitors to be operational by January 1, 2015.
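The population and traffic triggers in paragraphs (iii) and (iv) can be combined into a monitor count. This is a sketch under stated assumptions: `max_segment_aadt` is a hypothetical input meaning the highest AADT of any roadway segment in the CBSA, and appendix D, section 4.3.2 to this part governs the actual requirement.

```python
def required_near_road_no2_monitors(cbsa_population: int,
                                    max_segment_aadt: int) -> int:
    """Count of near-road NO2 monitors implied by paragraphs (iii) and (iv)."""
    if cbsa_population >= 2_500_000:
        return 2  # second monitor required on population alone
    if cbsa_population >= 1_000_000:
        # Second monitor if any roadway segment carries 250,000 or more AADT.
        return 2 if max_segment_aadt >= 250_000 else 1
    return 0
```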

(6) A plan for establishing SO2 monitoring sites in accordance with the requirements of appendix D to this part shall be submitted to the EPA Regional Administrator by July 1, 2011, as part of the annual network plan required in paragraph (a)(1) of this section. The plan shall provide for all required SO2 monitoring sites to be operational by January 1, 2013.

(7) A plan for establishing CO monitoring sites in accordance with the requirements of appendix D to this part shall be submitted to the EPA Regional Administrator. Plans for required CO monitors shall be submitted at least six months prior to the date such monitors must be established as required by §58.13.

(8)(i) A plan for establishing near-road PM2.5 monitoring sites in CBSAs having 2.5 million or more persons, in accordance with the requirements of appendix D to this part, shall be submitted as part of the annual monitoring network plan to the EPA Regional Administrator by July 1, 2014. The plan shall provide for these required monitoring stations to be operational by January 1, 2015.

(ii) A plan for establishing near-road PM2.5 monitoring sites in CBSAs having 1 million or more persons, but less than 2.5 million persons, in accordance with the requirements of appendix D to this part, shall be submitted as part of the annual monitoring network plan to the EPA Regional Administrator by July 1, 2016. The plan shall provide for these required monitoring stations to be operational by January 1, 2017.
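The two population tiers in paragraph (8) map to fixed dates, which a lookup function can make explicit. The function name is hypothetical, and the sketch assumes the CBSA population figure is already known; appendix D to this part governs which CBSAs are covered.

```python
from datetime import date
from typing import Optional, Tuple


def near_road_pm25_deadlines(cbsa_population: int) -> Optional[Tuple[date, date]]:
    """Return (plan_due, operational_by) for near-road PM2.5 sites, or None."""
    if cbsa_population >= 2_500_000:
        return date(2014, 7, 1), date(2015, 1, 1)   # paragraph (8)(i)
    if cbsa_population >= 1_000_000:
        return date(2016, 7, 1), date(2017, 1, 1)   # paragraph (8)(ii)
    return None
```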

(9) The annual monitoring network plan shall provide for the required O3 sites to be operating on the first day of the applicable required O3 monitoring season in effect on January 1, 2017 as listed in Table D-3 of appendix D of this part.

(10) A plan for making Photochemical Assessment Monitoring Stations (PAMS) measurements, if applicable, in accordance with the requirements of appendix D paragraph 5(a) of this part shall be submitted to the EPA Regional Administrator no later than July 1, 2018. The plan shall provide for the required PAMS measurements to begin by June 1, 2019.

(11) An Enhanced Monitoring Plan for O3, if applicable, in accordance with the requirements of appendix D paragraph 5(h) of this part shall be submitted to the EPA Regional Administrator no later than October 1, 2019 or two years following the effective date of a designation to a classification of Moderate or above O3 nonattainment, whichever is later.
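The "whichever is later" rule can be computed directly. This sketch assumes "two years following" means 24 calendar months after the designation's effective date, with a February 29 start clamped to February 28; the function names are hypothetical, and the rule applies only to areas classified Moderate or above.

```python
import calendar
from datetime import date

EMP_BASE_DEADLINE = date(2019, 10, 1)


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping to the last day of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def enhanced_monitoring_plan_due(designation_effective: date) -> date:
    """Later of October 1, 2019 or two years after the designation date."""
    return max(EMP_BASE_DEADLINE, add_months(designation_effective, 24))
```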

(12) A detailed description of the PAMS network being operated in accordance with the requirements of appendix D to this part shall be submitted as part of the annual monitoring network plan for review by the EPA Administrator. The PAMS Network Description described in section 5 of appendix D may be used to meet this requirement.

(b) The annual monitoring network plan must contain the following information for each existing and proposed site:

(1) The AQS site identification number.

(2) The location, including street address and geographical coordinates.

(3) The sampling and analysis method(s) for each measured parameter.

(4) The operating schedules for each monitor.

(5) Any proposals to remove or move a monitoring station within a period of 18 months following plan submittal.

(6) The monitoring objective and spatial scale of representativeness for each monitor as defined in appendix D to this part.

(7) The identification of any sites that are suitable and sites that are not suitable for comparison against the annual PM2.5 NAAQS as described in §58.30.

(8) The MSA, CBSA, CSA or other area represented by the monitor.

(9) The designation of any Pb monitors as either source-oriented or non-source-oriented according to Appendix D to 40 CFR part 58.

(10) Any monitors for which a waiver has been requested or granted by the EPA Regional Administrator as allowed for under appendix D or appendix E to this part. For those monitors where a waiver has been approved, the annual monitoring network plan shall include the date the waiver was approved.

(11) Any source-oriented or non-source-oriented site for which a waiver has been requested or granted by the EPA Regional Administrator for the use of Pb-PM10 monitoring in lieu of Pb-TSP monitoring as allowed for under paragraph 2.10 of Appendix C to 40 CFR part 58.

(12) The identification of required NO2 monitors as near-road, area-wide, or vulnerable and susceptible population monitors in accordance with Appendix D, section 4.3 of this part.

(13) The identification of any PM2.5 FEMs used in the monitoring agency's network where the data are not of sufficient quality such that data are not to be compared to the national ambient air quality standards (NAAQS). For required SLAMS where the agency identifies that the PM2.5 Class III FEM does not produce data of sufficient quality for comparison to the NAAQS, the monitoring agency must ensure that an operating FRM or filter-based FEM meeting the sample frequency requirements described in §58.12 or other Class III PM2.5 FEM with data of sufficient quality is operating and reporting data to meet the network design criteria described in appendix D to this part.

(14) The identification of any site(s) intended to address being sited in an at-risk community where there are anticipated effects from sources in the area as required in section 4.7.1(b)(3) of appendix D to this part. An initial approach to the question of whether any new or moved sites are needed and to identify the communities in which they intend to add monitoring for meeting the requirement in this paragraph (b)(14), if applicable, shall be submitted in accordance with the requirements of section 4.7.1(b)(3) of appendix D to this part, which includes submission to the EPA Regional Administrator no later than July 1, 2024. Specifics on the resulting proposed new or moved sites for PM2.5 network design to address at-risk communities, if applicable, would need to be detailed in annual monitoring network plans due to each applicable EPA Regional office no later than July 1, 2025. The plan shall provide for any required sites to be operational no later than 24 months from date of approval of a plan or January 1, 2027, whichever comes first.
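The "whichever comes first" operational deadline can be sketched as a minimum of two dates. This assumes "24 months from date of approval" means adding 24 calendar months to the approval date, clamping to month end when needed; the function names are hypothetical.

```python
import calendar
from datetime import date

AT_RISK_BACKSTOP = date(2027, 1, 1)


def add_months(d: date, months: int) -> date:
    """Add calendar months, clamping to the last day of the target month."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def at_risk_sites_operational_by(plan_approved: date) -> date:
    """Earlier of 24 months after plan approval or January 1, 2027."""
    return min(add_months(plan_approved, 24), AT_RISK_BACKSTOP)
```

Under this reading, any plan approved after January 1, 2025 is bound by the January 1, 2027 date rather than the 24-month window.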

(c) The annual monitoring network plan must document how state and local agencies provide for the review of changes to a PM2.5 monitoring network that impact the location of a violating PM2.5 monitor. The affected state or local agency must document the process for obtaining public comment and include any comments received through the public notification process within their submitted plan.

(d) The State, or where applicable local, agency shall perform and submit to the EPA Regional Administrator an assessment of the air quality surveillance system every 5 years to determine, at a minimum, if the network meets the monitoring objectives defined in appendix D to this part, whether new sites are needed, whether existing sites are no longer needed and can be terminated, and whether new technologies are appropriate for incorporation into the ambient air monitoring network. The network assessment must consider the ability of existing and proposed sites to support air quality characterization for areas with relatively high populations of susceptible individuals (e.g., children with asthma) and other at-risk populations, and, for any sites that are being proposed for discontinuance, the effect on data users other than the agency itself, such as nearby States and Tribes or health effects studies. The State, or where applicable local, agency must submit a copy of this 5-year assessment, along with a revised annual network plan, to the Regional Administrator. The assessments are due every 5 years beginning July 1, 2010.
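The 5-year cadence starting July 1, 2010 can be enumerated in code. This sketch assumes every assessment falls due on July 1 of each fifth year; the function names are hypothetical.

```python
from datetime import date
from typing import List

FIRST_ASSESSMENT = date(2010, 7, 1)
INTERVAL_YEARS = 5


def assessment_due_dates(through_year: int) -> List[date]:
    """All 5-year assessment due dates from 2010 through the given year."""
    return [date(y, 7, 1)
            for y in range(FIRST_ASSESSMENT.year, through_year + 1, INTERVAL_YEARS)]


def next_assessment_due(on_or_after: date) -> date:
    """First due date falling on or after the given date."""
    year = FIRST_ASSESSMENT.year
    while date(year, 7, 1) < on_or_after:
        year += INTERVAL_YEARS
    return date(year, 7, 1)
```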

(e) All proposed additions and discontinuations of SLAMS monitors in annual monitoring network plans and periodic network assessments are subject to approval according to §58.14.

[71 FR 61298, Oct. 17, 2006, as amended at 72 FR 32210, June 12, 2007; 73 FR 67059, Nov. 12, 2008; 73 FR 77517, Dec. 19, 2008; 75 FR 6534, Feb. 9, 2010; 75 FR 35601, June 22, 2010; 75 FR 81137, Dec. 27, 2010; 76 FR 54341, Aug. 31, 2011; 78 FR 16188, Mar. 14, 2013; 78 FR 3282, Jan. 15, 2013; 80 FR 65466, Oct. 26, 2015; 81 FR 17279, Mar. 28, 2016; 81 FR 96388, Dec. 30, 2016; 89 FR 16388, March 6, 2024]

§58.11 Network technical requirements.

(a)(1) State and local governments shall follow the applicable quality assurance criteria contained in appendix A to this part when operating the SLAMS networks.

(2) Beginning January 1, 2009, State and local governments shall follow the quality assurance criteria contained in appendix A to this part that apply to SPM sites when operating any SPM site which uses an FRM or an FEM and meets the requirements of appendix E to this part, unless the Regional Administrator approves an alternative to the requirements of appendix A with respect to such SPM sites because meeting those requirements would be physically and/or financially impractical due to physical conditions at the monitoring site and the requirements are not essential to achieving the intended data objectives of the SPM site. Alternatives to the requirements of appendix A may be approved for an SPM site as part of the approval of the annual monitoring plan, or separately.

(3) The owner or operator of an existing or a proposed source shall follow the quality assurance criteria in appendix B to this part that apply to PSD monitoring when operating a PSD site.

(b) State and local governments must follow the criteria in appendix C to this part to determine acceptable monitoring methods or instruments for use in SLAMS networks. Appendix C criteria are optional at SPM stations.

(c) State and local governments must follow the network design criteria contained in appendix D to this part in designing and maintaining the SLAMS stations. The final network design and all changes in design are subject to approval of the Regional Administrator. NCore and STN network design and changes are also subject to approval of the Administrator. Changes in SPM stations do not require approvals, but a change in the designation of a monitoring site from SLAMS to SPM requires approval of the Regional Administrator.

(d) State and local governments must follow the criteria contained in appendix E to this part for siting monitor inlets, paths or probes at SLAMS stations. Appendix E adherence is optional for SPM stations.

(e) State and local governments must assess data from Class III PM 2.5 FEM monitors operated within their network using the performance criteria described in table C–4 to subpart C of part 53 of this chapter, for cases where the data are identified as not of sufficient comparability to a collocated FRM, and the monitoring agency requests that the FEM data not be used in comparison to the NAAQS. These assessments are required in the monitoring agency's annual monitoring network plan described in §58.10(b) for cases where the FEM is identified as not of sufficient comparability to a collocated FRM. For these collocated PM 2.5 monitors, the performance criteria apply with the following additional provisions:

(1) The acceptable concentration range (Rj), in µg/m3, may include values down to 0 µg/m3.

(2) The minimum number of test sites shall be at least one; however, the number of test sites will generally include all locations within an agency's network with collocated FRMs and FEMs.

(3) The minimum number of methods shall include at least one FRM and at least one FEM.

(4) Since multiple FRMs and FEMs may not be present at each site, the precision statistic requirement does not apply, even if precision data are available.

(5) All seasons must be covered with no more than 36 consecutive months of data in total aggregated together.

(6) The key statistical metric to include in an assessment is the bias (both additive and multiplicative) of the PM 2.5 continuous FEM(s) compared to a collocated FRM(s). Correlation is required to be reported in the assessment, but failure to meet the correlation criteria, by itself, is not cause to exclude data from a continuous FEM monitor.

[71 FR 61298, Oct. 17, 2006, as amended at 78 FR 3282, Jan. 15, 2013; 80 FR 65466, Oct. 26, 2015; 81 FR 17279, Mar. 28, 2016; 89 FR 16389, March 6, 2024]
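The bias metrics in §58.11(e)(6) follow this part's definition of additive and multiplicative bias: the intercept and slope of a linear fit to paired candidate (FEM) and reference (FRM) mean measurements. The Python sketch below computes both by ordinary least squares, together with the correlation that paragraph (e)(6) requires to be reported. The choice of ordinary least squares, the function name `bias_and_correlation`, and the sample values are assumptions for illustration; the acceptance limits themselves are in table C–4 to subpart C of part 53 and are not reproduced here.

```python
from statistics import mean


def bias_and_correlation(frm, fem):
    """Return (additive_bias, multiplicative_bias, r) for paired FRM/FEM means.

    Assumption: ordinary least squares with the FEM (candidate) as y and the
    collocated FRM (reference) as x; values may include 0 ug/m3 per (e)(1).
    """
    if len(frm) != len(fem):
        raise ValueError("FRM and FEM series must be paired")
    if len(frm) < 3:
        raise ValueError("need at least three pairs for a meaningful fit")
    x_bar, y_bar = mean(frm), mean(fem)
    sxx = sum((x - x_bar) ** 2 for x in frm)
    syy = sum((y - y_bar) ** 2 for y in fem)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(frm, fem))
    if sxx == 0 or syy == 0:
        raise ValueError("concentrations must vary to fit a line")
    slope = sxy / sxx                  # multiplicative bias (unitless)
    intercept = y_bar - slope * x_bar  # additive bias, ug/m3
    r = sxy / (sxx * syy) ** 0.5       # Pearson correlation, reported per (e)(6)
    return intercept, slope, r


# Hypothetical paired 24-hour means (ug/m3): FEM reads 10% high plus a 0.5 offset.
frm_means = [4.0, 7.5, 9.0, 12.0, 18.5]
fem_means = [0.5 + 1.1 * x for x in frm_means]
print(bias_and_correlation(frm_means, fem_means))
```

Because paragraph (e)(6) states that a correlation shortfall alone is not cause to exclude continuous FEM data, the sketch reports `r` without applying any pass/fail test to it.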

§58.12 Operating schedules.

State and local governments shall collect ambient air quality data at any SLAMS station on the following operational schedules:

(a) For continuous analyzers, consecutive hourly averages must be collected except during:

(1) Periods of routine maintenance,

(2) Periods of instrument calibration, or

(3) Periods or monitoring seasons exempted by the Regional Administrator.

(b) For Pb manual methods, at least one 24-hour sample must be collected every 6 days except during periods or seasons exempted by the Regional Administrator.

(c) For PAMS VOC samplers, samples must be collected as specified in section 5 of appendix D to this part. Area-specific PAMS operating schedules must be included as part of the PAMS network description and must be approved by the Regional Administrator.

(d) For manual PM 2.5 samplers:

(1)(i) Manual PM 2.5 samplers at required SLAMS stations without a collocated continuously operating PM 2.5 monitor must operate on at least a 1-in-3 day schedule unless a waiver for an alternative schedule has been approved per paragraph (d)(1)(ii) of this section.

(ii) For SLAMS PM 2.5 sites with both manual and continuous PM 2.5 monitors operating, the monitoring agency may request approval for a reduction to 1-in-6 day PM 2.5 sampling or for seasonal sampling from the EPA Regional Administrator. Other requests for a reduction to 1-in-6 day PM 2.5 sampling or for seasonal sampling may be approved on a case-by-case basis. The EPA Regional Administrator may grant sampling frequency reductions after consideration of factors (including but not limited to the historical PM 2.5 data quality assessments, the location of current PM 2.5 design value sites, and their regulatory data needs) if the Regional Administrator determines that the reduction in sampling frequency will not compromise data needed for implementation of the NAAQS. Required SLAMS stations whose measurements determine the design value for their area and that are within plus or minus 10 percent of the annual NAAQS, and all required sites where one or more 24-hour values have exceeded the 24-hour NAAQS each year for a consecutive period of at least 3 years are required to maintain at least a 1-in-3 day sampling frequency until the design value no longer meets the criteria in this paragraph (d)(1)(ii) for 3 consecutive years. A continuously operating FEM PM 2.5 monitor satisfies the requirement in this paragraph (d)(1)(ii) unless it is identified in the monitoring agency's annual monitoring network plan as not appropriate for comparison to the NAAQS and the EPA Regional Administrator has approved that the data from that monitor may be excluded from comparison to the NAAQS.

(iii) Required SLAMS stations whose measurements determine the 24-hour design value for their area and whose data are within plus or minus 5 percent of the level of the 24-hour PM 2.5 NAAQS must have an FRM or FEM operate on a daily schedule if that area's design value for the annual NAAQS is less than the level of the annual PM 2.5 standard. A continuously operating FEM PM 2.5 monitor satisfies the requirement in this paragraph (d)(1)(iii) unless it is identified in the monitoring agency's annual monitoring network plan as not appropriate for comparison to the NAAQS and the EPA Regional Administrator has approved that the data from that monitor may be excluded from comparison to the NAAQS. The daily schedule must be maintained until the referenced design values no longer meet the criteria in this paragraph (d)(1)(iii) for 3 consecutive years.

(iv) Changes in sampling frequency attributable to changes in design values shall be implemented no later than January 1 of the calendar year following the certification of such data as described in §58.15.

(2) Manual PM 2.5 samplers at NCore stations and required regional background and regional transport sites must operate on at least a 1-in-3 day sampling frequency.

(3) Manual PM2.5 speciation samplers at STN stations must operate on at least a 1-in-3 day sampling frequency unless a reduction in sampling frequency has been approved by the EPA Administrator based on factors such as the area's design value, the role of the particular site in national health studies, the correlation of the site's species data with nearby sites, and presence of other leveraged measurements.

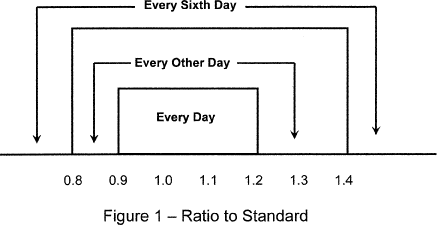

(e) For PM 10 samplers, a 24-hour sample must be taken from midnight to midnight (local standard time) to ensure national consistency. The minimum monitoring schedule for the site in the area of expected maximum concentration shall be based on the relative level of that monitoring site concentration with respect to the 24-hour standard as illustrated in Figure 1. If the operating agency demonstrates by monitoring data that during certain periods of the year conditions preclude violation of the PM 10 24-hour standard, the increased sampling frequency for those periods or seasons may be exempted by the Regional Administrator and permitted to revert to once in six days. The minimum sampling schedule for all other sites in the area remains once every six days. No less frequently than as part of each 5-year network assessment, the most recent year of data must be considered to estimate the air quality status at the site near the area of maximum concentration. Statistical models such as analysis of concentration frequency distributions as described in “Guideline for the Interpretation of Ozone Air Quality Standards,” EPA-450/4-79-003, U.S. Environmental Protection Agency, Research Triangle Park, NC, January 1979, should be used. Adjustments to the monitoring schedule must be made on the basis of the 5-year network assessment. The site having the highest concentration in the most current year must be given first consideration when selecting the site for the more frequent sampling schedule. Other factors such as a major change in sources of PM 10 emissions or in sampling site characteristics could influence the location of the expected maximum concentration site. Also, the use of the most recent 3 years of data might, in some cases, be justified in order to provide a more representative database from which to estimate current air quality status and to provide stability to the network. This multiyear consideration reduces the possibility of an anomalous year biasing a site selected for accelerated sampling. If the maximum concentration site based on the most current year is not selected for the more frequent operating schedule, documentation of the justification for selection of an alternative site must be submitted to the Regional Office for approval during the 5-year network assessment process. Minimum data completeness criteria, number of years of data and sampling frequency for judging attainment of the NAAQS are discussed in appendix K of part 50 of this chapter.

(f) For manual PM 10-2.5 samplers:

(1) Manual PM 10-2.5 samplers at NCore stations must operate on at least a 1-in-3 day schedule at sites without a collocated continuously operating federal equivalent PM 10-2.5 method that has been designated in accordance with part 53 of this chapter.

(2) [Reserved]

(g) For continuous SO2 analyzers, the maximum 5-minute block average concentration of the twelve 5-minute blocks in each hour must be collected except as noted in §58.12 (a).

[71 FR 61298, Oct. 17, 2006, as amended at 72 FR 32210, June 12, 2007; 75 FR 35601, June 22, 2010; 78 FR 3282, Jan. 15, 2013; 81 FR 17279, Mar. 28, 2016; 89 FR 16389, March 6, 2024]
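The design-value tests in §58.12(d)(1)(ii) and (iii) can be read as a small decision rule. The Python sketch below returns the minimum FRM/FEM sampling interval in days for a required SLAMS PM2.5 site. It is a simplification under stated assumptions: the function and parameter names are hypothetical, the NAAQS levels are passed in rather than hard-coded, and both the 3-consecutive-year persistence rule and the substitution of a collocated continuous FEM are left to the caller.

```python
def min_sampling_interval_days(
    sets_annual_dv: bool,
    sets_24h_dv: bool,
    annual_dv: float,
    daily_dv: float,
    annual_naaqs: float,
    daily_naaqs: float,
    exceeded_24h_each_of_last_3_years: bool,
    reduction_approved: bool,
) -> int:
    """Hypothetical reading of §58.12(d)(1): 1 = daily, 3 = 1-in-3, 6 = 1-in-6."""
    near_annual = abs(annual_dv - annual_naaqs) <= 0.10 * annual_naaqs
    near_daily = abs(daily_dv - daily_naaqs) <= 0.05 * daily_naaqs

    # (d)(1)(iii): near the 24-hour level while the annual design value is below the annual level.
    if sets_24h_dv and near_daily and annual_dv < annual_naaqs:
        return 1
    # (d)(1)(ii): these sites must keep at least a 1-in-3 day schedule.
    if (sets_annual_dv and near_annual) or exceeded_24h_each_of_last_3_years:
        return 3
    # (d)(1)(i)/(ii): 1-in-3 by default, 1-in-6 only with Regional Administrator approval.
    return 6 if reduction_approved else 3
```

Passing the standard levels as arguments keeps the sketch usable if the NAAQS are revised, since paragraph (d)(1) states its tests relative to whatever levels are in effect.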

§58.13 Monitoring network completion.

(a) The network of NCore multipollutant sites must be physically established no later than January 1, 2011, and at that time, operating under all of the requirements of this part, including the requirements of appendices A, C, D, E, and G to this part. NCore sites required to conduct Pb monitoring as required under 40 CFR part 58 appendix D paragraph 3(b), or approved alternative non-source-oriented Pb monitoring sites, shall begin Pb monitoring in accordance with all of the requirements of this part, including the requirements of appendices A, C, D, E, and G to this part no later than December 27, 2011.

(b) Notwithstanding specific dates included in this part, beginning January 1, 2008, when existing networks are not in conformance with the minimum number of required monitors specified in this part, additional required monitors must be identified in the next applicable annual monitoring network plan, with monitoring operation beginning by January 1 of the following year. To allow sufficient time to prepare and comment on Annual Monitoring Network Plans, only monitoring requirements effective 120 days prior to the required submission date of the plan (i.e., 120 days prior to July 1 of each year) shall be included in that year's annual monitoring network plan.

(c) The NO2 monitors required under Appendix D, section 4.3 of this part must be physically established and operating under all of the requirements of this part, including the requirements of appendices A, C, D, and E to this part, no later than:

(1) January 1, 2013, for area-wide NO2 monitors required in Appendix D, section 4.3.3;

(2) January 1, 2013, for NO2 monitors intended to characterize vulnerable and susceptible populations that are required in Appendix D, section 4.3.4;

(3) January 1, 2014, for an initial near-road NO2 monitor in CBSAs having 1,000,000 or more persons that is required in Appendix D, section 4.3.2;

(4) January 1, 2015, for a second near-road NO2 monitor in CBSAs that have a population of 2,500,000 or more persons or a second monitor in any CBSA with a population of 1,000,000 or more persons that has one or more roadway segments with 250,000 or greater AADT counts that is required in appendix D, section 4.3.2.

(d) The network of SO2 monitors must be physically established no later than January 1, 2013, and at that time, must be operating under all of the requirements of this part, including the requirements of appendices A, C, D, and E to this part.

(e) The CO monitors required under Appendix D, section 4.2 of this part must be physically established and operating under all of the requirements of this part, including the requirements of appendices A, C, D, and E to this part, no later than:

(1) January 1, 2015 for CO monitors in CBSAs having 2.5 million persons or more; or

(2) January 1, 2017 for other CO monitors.

(f) PM 2.5 monitors required in near-road environments as described in appendix D to this part must be physically established and operating under all of the requirements of this part, including the requirements of appendices A, C, D, and E to this part, no later than:

(1) January 1, 2015 for PM 2.5 monitors in CBSAs having 2.5 million persons or more; or

(2) January 1, 2017 for PM 2.5 monitors in CBSAs having 1 million or more, but less than 2.5 million persons.

(g) The O3 monitors required under appendix D, section 4.1 of this part must operate on the first day of the applicable required O3 monitoring season in effect January 1, 2017.

(h) The Photochemical Assessment Monitoring sites required under appendix D of this part, section 5(a), must be physically established and operating under all of the requirements of this part, including the requirements of appendices A, C, D, and E of this part, no later than June 1, 2021.

[71 FR 61298, Oct. 17, 2006, as amended at 73 FR 67059, Nov. 12, 2008; 75 FR 6534, Feb. 9, 2010; 75 FR 35601, June 22, 2010; 75 FR 81137, Dec. 27, 2010; 76 FR 54341, Aug. 31, 2011; 78 FR 16188, Mar. 14, 2013; 78 FR 3283, Jan. 15, 2013; 80 FR 65466, Oct. 26, 2015; 81 FR 96388, Dec. 30, 2016; 85 FR 837, Jan. 8, 2020]
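Two parts of §58.13 reduce to date arithmetic and population thresholds: the 120-day cutoff in paragraph (b) and the population tiers in paragraphs (c), (e), and (f). The Python sketch below encodes them. Treating a requirement that becomes effective exactly on the cutoff date as included is an assumption, as are all function names; the deadlines are copied from the paragraphs above and do not decide whether appendix D requires a given monitor in the first place.

```python
from datetime import date, timedelta

PLAN_DUE = (7, 1)   # annual monitoring network plan due July 1, per paragraph (b)
CUTOFF_DAYS = 120


def plan_cutoff(plan_year: int) -> date:
    """Latest effective date for a requirement to appear in that year's plan."""
    return date(plan_year, *PLAN_DUE) - timedelta(days=CUTOFF_DAYS)


def included_in_plan(effective: date, plan_year: int) -> bool:
    # Assumption: "effective 120 days prior" is read as effective on or before the cutoff.
    return effective <= plan_cutoff(plan_year)


def near_road_no2_deadlines(cbsa_population: int, max_segment_aadt: int) -> list:
    """Paragraph (c)(3)-(4): deadlines for the first and second near-road NO2 monitors."""
    deadlines = []
    if cbsa_population >= 1_000_000:
        deadlines.append(date(2014, 1, 1))
        if cbsa_population >= 2_500_000 or max_segment_aadt >= 250_000:
            deadlines.append(date(2015, 1, 1))
    return deadlines


def co_deadline(cbsa_population: int) -> date:
    """Paragraph (e): CO monitors required under appendix D, section 4.2."""
    return date(2015, 1, 1) if cbsa_population >= 2_500_000 else date(2017, 1, 1)


def near_road_pm25_deadline(cbsa_population: int):
    """Paragraph (f): returns None when the paragraph sets no date for the CBSA size."""
    if cbsa_population >= 2_500_000:
        return date(2015, 1, 1)
    if cbsa_population >= 1_000_000:
        return date(2017, 1, 1)
    return None
```

With a July 1 due date, `plan_cutoff(2025)` is March 3, 2025, so under this reading a requirement taking effect on March 4, 2025 would first appear in the 2026 plan.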

§58.14 System modification.

(a) The state, or where appropriate local, agency shall develop a network modification plan and schedule to modify the ambient air quality monitoring network that addresses the findings of the network assessment required every 5 years by §58.10(d). The network modification plan shall be submitted as part of the Annual Monitoring Network Plan that is due no later than the year after submittal of the network assessment.

(b) Nothing in this section shall preclude the State, or where appropriate local, agency from making modifications to the SLAMS network for reasons other than those resulting from the periodic network assessments. These modifications must be reviewed and approved by the Regional Administrator. Each monitoring network may make or be required to make changes between the 5-year assessment periods, including for example, site relocations or the addition of PAMS networks in bumped-up ozone nonattainment areas. These modifications must address changes invoked by a new census and changes due to changing air quality levels. The State, or where appropriate local, agency shall provide written communication describing the network changes to the Regional Administrator for review and approval as these changes are identified.

(c) State, or where appropriate, local agency requests for SLAMS monitor station discontinuation, subject to the review of the Regional Administrator, will be approved if any of the following criteria are met and if the requirements of appendix D to this part, if any, continue to be met. Other requests for discontinuation may also be approved on a case-by-case basis if discontinuance does not compromise data collection needed for implementation of a NAAQS and if the requirements of appendix D to this part, if any, continue to be met.

(1) Any PM 2.5, O3, CO, PM 10, SO2, Pb, or NO2 SLAMS monitor which has shown attainment during the previous five years, that has a probability of less than 10 percent of exceeding 80 percent of the applicable NAAQS during the next three years based on the levels, trends, and variability observed in the past, and which is not specifically required by an attainment plan or maintenance plan. In a nonattainment or maintenance area, if the most recent attainment or maintenance plan adopted by the State and approved by EPA contains a contingency measure to be triggered by an air quality concentration and the monitor to be discontinued is the only SLAMS monitor operating in the nonattainment or maintenance area, the monitor may not be discontinued.

(2) Any SLAMS monitor for CO, PM 10, SO2, or NO2 which has consistently measured lower concentrations than another monitor for the same pollutant in the same county (or portion of a county within a distinct attainment area, nonattainment area, or maintenance area, as applicable) during the previous five years, and which is not specifically required by an attainment plan or maintenance plan, if control measures scheduled to be implemented or discontinued during the next five years would apply to the areas around both monitors and have similar effects on measured concentrations, such that the retained monitor would remain the higher reading of the two monitors being compared.

(3) For any pollutant, any SLAMS monitor in a county (or portion of a county within a distinct attainment, nonattainment, or maintenance area, as applicable) provided the monitor has not measured violations of the applicable NAAQS in the previous five years, and the approved SIP provides for a specific, reproducible approach to representing the air quality of the affected county in the absence of actual monitoring data.

(4) A PM 2.5 SLAMS monitor which EPA has determined cannot be compared to the relevant NAAQS because of the siting of the monitor, in accordance with §58.30.

(5) A SLAMS monitor that is designed to measure concentrations upwind of an urban area for purposes of characterizing transport into the area and that has not recorded violations of the relevant NAAQS in the previous five years, if discontinuation of the monitor is tied to start-up of another station also characterizing transport.

(6) A SLAMS monitor not eligible for removal under any of the criteria in paragraphs (c)(1) through (c)(5) of this section may be moved to a nearby location with the same scale of representation if logistical problems beyond the State's control make it impossible to continue operation at its current site.

[71 FR 61298, Oct. 17, 2006, as amended at 81 FR 17280, Mar. 28, 2016]

§58.15 Annual air monitoring data certification.

(a) The State, or where appropriate local, agency shall submit to the EPA Regional Administrator an annual air monitoring data certification letter to certify data collected by FRM and FEM monitors at SLAMS and SPM sites that meet criteria in appendix A to this part from January 1 to December 31 of the previous year. The head official in each monitoring agency, or his or her designee, shall certify that the previous year of ambient concentration and quality assurance data are completely submitted to AQS and that the ambient concentration data are accurate to the best of her or his knowledge, taking into consideration the quality assurance findings. The annual data certification letter is due by May 1 of each year.

(b) Along with each certification letter, the State shall submit to the Regional Administrator an annual summary report of all the ambient air quality data collected by FRM and FEM monitors at SLAMS and SPM sites. The annual report(s) shall be submitted for data collected from January 1 to December 31 of the previous year. The annual summary serves as the record of the specific data that is the object of the certification letter.

(c) Along with each certification letter, the State shall submit to the Regional Administrator a summary of the precision and accuracy data for all ambient air quality data collected by FRM and FEM monitors at SLAMS and SPM sites. The summary of precision and accuracy shall be submitted for data collected from January 1 to December 31 of the previous year.

[81 FR 17280, Mar. 28, 2016; 89 FR 16383, March 6, 2024]

§58.16 Data submittal and archiving requirements.

(a) The state, or where appropriate, local agency, shall report to the Administrator, via AQS all ambient air quality data and associated quality assurance data for SO2; CO; O3; NO2; NO; NOy; NOX; Pb-TSP mass concentration; Pb-PM10 mass concentration; PM10 mass concentration; PM2.5 mass concentration; for filter-based PM2.5 FRM/FEM, the field blank mass; chemically speciated PM2.5 mass concentration data; PM10-2.5 mass concentration; meteorological data from NCore and PAMS sites; and metadata records and information specified by the AQS Data Coding Manual (https://www.epa.gov/sites/production/files/2015-09/documents/aqs_data_coding_manual_0.pdf). Air quality data and information must be submitted directly to the AQS via electronic transmission on the specified schedule described in paragraphs (b) and (d) of this section.

(b) The specific quarterly reporting periods are January 1-March 31, April 1-June 30, July 1-September 30, and October 1-December 31. The data and information reported for each reporting period must contain all data and information gathered during the reporting period, and be received in the AQS within 90 days after the end of the quarterly reporting period. For example, the data for the reporting period January 1-March 31 are due on or before June 30 of that year.

(c) Air quality data submitted for each reporting period must be edited, validated, and entered into the AQS (within the time limits specified in paragraphs (b) and (d) of this section) pursuant to appropriate AQS procedures. The procedures for editing and validating data are described in the AQS Data Coding Manual and in each monitoring agency's quality assurance project plan.

(d) The state shall report VOC and if collected, carbonyl, NH3, and HNO3 data from PAMS sites, and chemically speciated PM2.5 mass concentration data to AQS within 6 months following the end of each quarterly reporting period listed in paragraph (b) of this section.

(e) The State shall also submit any portion or all of the SLAMS and SPM data to the appropriate Regional Administrator upon request.

(f) The state, or where applicable, local agency shall archive all PM 2.5, PM 10, and PM 10-2.5 filters from manual low-volume samplers (samplers having flow rates less than 200 liters/minute) from all SLAMS sites for a minimum period of 5 years after collection. These filters shall be made available for supplemental analyses, including destructive analyses if necessary, at the request of EPA or to provide information to state and local agencies on particulate matter composition. Other Federal agencies may request access to filters for purposes of supporting air quality management or community health (such as biological assay) through the applicable EPA Regional Administrator. The filters shall be archived according to procedures approved by the Administrator, which shall include cold storage of filters after post-sampling laboratory analyses for at least 12 months following field sampling. The EPA recommends that particulate matter filters be archived for longer periods, especially for key sites in making NAAQS-related decisions or for supporting health-related air pollution studies.

(g) Any State or, where applicable, local agency operating a continuous SO2 analyzer shall report the maximum 5-minute SO2 block average of the twelve 5-minute block averages in each hour, in addition to the hourly SO2 average.

[71 FR 61298, Oct. 17, 2006, as amended at 73 FR 67059, Nov. 12, 2008; 75 FR 6534, Feb. 9, 2010; 75 FR 35602, June 22, 2010; 78 FR 3283, Jan. 15, 2013; 81 FR 17280, Mar. 28, 2016]
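Paragraphs (b), (d), (f), and (g) of §58.16 are concrete enough to sketch in Python. Three assumptions are made: the 90-day window in paragraph (b) is taken to end on the last day of the third month after the quarter, which reproduces the paragraph's own example (January 1-March 31 data due by June 30); the 6-month window in paragraph (d) is treated the same way; and the hourly SO2 average in paragraph (g) is taken as the mean of twelve complete 5-minute blocks. All names are hypothetical.

```python
import calendar
from datetime import date


def quarter_bounds(d: date):
    """Return (first_day, last_day) of the quarterly reporting period containing d."""
    first_month = 3 * ((d.month - 1) // 3) + 1
    last_month = first_month + 2
    last_day = calendar.monthrange(d.year, last_month)[1]
    return date(d.year, first_month, 1), date(d.year, last_month, last_day)


def month_end_after(d: date, months: int) -> date:
    """Last day of the month that is `months` months after d's month."""
    index = d.month - 1 + months
    year, month = d.year + index // 12, index % 12 + 1
    return date(year, month, calendar.monthrange(year, month)[1])


def aqs_due_dates(sample_date: date):
    """Return (routine_due, pams_and_speciation_due) for data collected on sample_date."""
    _, quarter_end = quarter_bounds(sample_date)
    return month_end_after(quarter_end, 3), month_end_after(quarter_end, 6)


def filter_archive_until(collected: date, flow_lpm: float, slams: bool):
    """Paragraph (f): minimum archive date for manual low-volume SLAMS filters, else None."""
    if not slams or flow_lpm >= 200:  # low-volume means less than 200 L/min
        return None
    try:
        return collected.replace(year=collected.year + 5)
    except ValueError:  # Feb 29 collection with a non-leap fifth year
        return collected.replace(year=collected.year + 5, day=28)


def so2_hour_summary(blocks):
    """Paragraph (g): (maximum 5-minute block average, hourly average) for one hour."""
    if len(blocks) != 12:
        raise ValueError("expected twelve 5-minute block averages")
    return max(blocks), sum(blocks) / len(blocks)
```

For a sample collected on November 5, the routine AQS due date under this reading is March 31 of the next year, and PAMS or speciation data are due by June 30 of the next year.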

Subpart C - Special Purpose Monitors

§58.20 Special purpose monitors (SPM).

(a) An SPM is defined as any monitor included in an agency's monitoring network that the agency has designated as a special purpose monitor in its annual monitoring network plan and in AQS, and which the agency does not count when showing compliance with the minimum requirements of this subpart for the number and siting of monitors of various types. Any SPM operated by an air monitoring agency must be included in the periodic assessments and annual monitoring network plan required by §58.10. The plan shall include a statement of purposes for each SPM monitor and evidence that operation of each monitor meets the requirements of appendix A to this part or an approved alternative as provided by §58.11(a)(2) where applicable. The monitoring agency may designate a monitor as an SPM after January 1, 2007 only if it is a new monitor, i.e., a SLAMS monitor that is not included in the currently applicable monitoring plan or, for a monitor included in the monitoring plan prior to January 1, 2007, if the Regional Administrator has approved the discontinuation of the monitor as a SLAMS site.

(b) Any SPM data collected by an air monitoring agency using a Federal reference method (FRM) or Federal equivalent method (FEM) must meet the requirements of §§58.11 and 58.12 and appendix A to this part or an approved alternative to appendix A. Compliance with appendix E to this part is optional but encouraged except when the monitoring agency's data objectives are inconsistent with the requirements in appendix E. Data collected at an SPM using an FRM or FEM meeting the requirements of appendix A must be submitted to AQS according to the requirements of §58.16. Data collected by other SPMs may be submitted. The monitoring agency must also submit to AQS an indication of whether each SPM reporting data to AQS meets the requirements of appendices A and E.

(c) All data from an SPM using an FRM or FEM which has operated for more than 24 months are eligible for comparison to the relevant NAAQS, subject to the conditions of §§58.11(e) and 58.30, unless the air monitoring agency demonstrates that the data came from a particular period during which the requirements of appendix A, appendix C, or appendix E to this part were not met, subject to review and EPA Regional Office approval as part of the annual monitoring network plan described in §58.10.

(d) If an SPM using an FRM or FEM is discontinued within 24 months of start-up, the Administrator will not base a NAAQS violation determination for the PM 2.5 or ozone NAAQS solely on data from the SPM.

(e) If an SPM using an FRM or FEM is discontinued within 24 months of start-up, the Administrator will not designate an area as nonattainment for the CO, SO2, NO2, or 24-hour PM 10 NAAQS solely on the basis of data from the SPM. Such data are eligible for use in determinations of whether a nonattainment area has attained one of these NAAQS.

(f) Prior approval from EPA is not required for discontinuance of an SPM.

[71 FR 61298, Oct. 17, 2006, as amended at 72 FR 32210, June 12, 2007; 73 FR 67060, Nov. 12, 2008; 78 FR 3283, Jan. 15, 2013; 89 FR 16390, March 6, 2024]
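The 24-month thresholds in §58.20(c) through (e) depend only on an SPM's start-up date, how long it has run, and whether it was discontinued. The Python sketch below counts whole calendar months of operation (an assumption; the section does not say how months are counted) and flags which provision governs. It covers only SPMs using an FRM or FEM, and the names are hypothetical.

```python
from datetime import date


def whole_months(start: date, end: date) -> int:
    """Number of complete calendar months from start to end."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if end.day < start.day:
        months -= 1
    return months


def spm_status(start_up: date, last_day: date, discontinued: bool) -> dict:
    """Summarize §58.20(c)-(e) for an SPM using an FRM or FEM."""
    months = whole_months(start_up, last_day)
    return {
        # (c): eligible after more than 24 months, still subject to §§58.11(e) and 58.30.
        "eligible_for_naaqs_comparison": months > 24,
        # (d)/(e): early shutdown bars relying on this SPM alone for a PM2.5/ozone
        # violation or a CO, SO2, NO2, or 24-hour PM10 nonattainment designation.
        "early_discontinuation_limits": discontinued and months <= 24,
    }
```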

Subpart D - Comparability of Ambient Data to the NAAQS

§58.30 Special considerations for data comparisons to the NAAQS.

(a) Comparability of PM 2.5 data. The primary and secondary annual and 24-hour PM 2.5 NAAQS are described in part 50 of this chapter. Monitors that follow the network technical requirements specified in §58.11 are eligible for comparison to the NAAQS subject to the additional requirements of this section. PM 2.5 measurement data from all eligible monitors are comparable to the 24-hour PM 2.5 NAAQS. PM 2.5 measurement data from all eligible monitors that are representative of area-wide air quality are comparable to the annual PM 2.5 NAAQS. Consistent with appendix D to this part, section 4.7.1, when micro- or middle-scale PM 2.5 monitoring sites collectively identify a larger region of localized high ambient PM 2.5 concentrations, such sites would be considered representative of an area-wide location and, therefore, eligible for comparison to the annual PM 2.5 NAAQS. PM 2.5 measurement data from monitors that are not representative of area-wide air quality but rather of relatively unique micro-scale, or localized hot spot, or unique middle-scale impact sites are not eligible for comparison to the annual PM 2.5 NAAQS. PM 2.5 measurement data from these monitors are eligible for comparison to the 24-hour PM 2.5 NAAQS. For example, if a micro- or middle-scale PM 2.5 monitoring site is adjacent to a unique dominating local PM 2.5 source, then the PM 2.5 measurement data from such a site would only be eligible for comparison to the 24-hour PM 2.5 NAAQS. Approval of sites that are suitable and sites that are not suitable for comparison with the annual PM 2.5 NAAQS is provided for as part of the annual monitoring network plan described in §58.10.

(b) [Reserved]

[71 FR 61302, Oct. 17, 2006, as amended at 78 FR 3283, Jan. 15, 2013]
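Paragraph §58.30(a) ties annual-NAAQS eligibility to whether a monitor is area-wide, which §58.1 defines as neighborhood, urban, or regional scale, plus micro- or middle-scale monitors representative of many such locations in the same CBSA. The Python sketch below encodes that mapping for a monitor already eligible under §58.11; the enum and function names are hypothetical, and approval through the annual monitoring network plan is not modeled.

```python
from enum import Enum


class Scale(Enum):
    MICRO = "micro"
    MIDDLE = "middle"
    NEIGHBORHOOD = "neighborhood"
    URBAN = "urban"
    REGIONAL = "regional"


AREA_WIDE_SCALES = {Scale.NEIGHBORHOOD, Scale.URBAN, Scale.REGIONAL}


def comparable_pm25_standards(scale: Scale, represents_many_locations: bool = False) -> set:
    """Return the PM2.5 NAAQS averaging times an eligible monitor's data may be compared to."""
    standards = {"24-hour"}  # data from all eligible monitors
    area_wide = scale in AREA_WIDE_SCALES or (
        scale in {Scale.MICRO, Scale.MIDDLE} and represents_many_locations
    )
    if area_wide:
        standards.add("annual")
    return standards
```

A middle-scale monitor adjacent to a unique dominating local source, as in the paragraph's example, corresponds to `represents_many_locations=False` and returns only the 24-hour standard.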

Subpart E [Reserved]

Subpart F - Air Quality Index Reporting

§58.50 Index reporting.

(a) The State or where applicable, local agency shall report to the general public on a daily basis through prominent notice an air quality index that complies with the requirements of appendix G to this part.

(b) Reporting is required for each individual MSA with a population exceeding 350,000.

(c) The population of a metropolitan statistical area for purposes of index reporting is the latest available U.S. census population.

[71 FR 61302, Oct. 17, 2006, as amended at 80 FR 65466, Oct. 26, 2015]

Subpart G - Federal Monitoring

§58.60 Federal monitoring.

The Administrator may locate and operate an ambient air monitoring site if the State or local agency fails to locate, or schedule to be located, during the initial network design process, or as a result of the 5-year network assessments required in §58.10, a SLAMS station at a site which is necessary in the judgment of the Regional Administrator to meet the objectives defined in appendix D to this part.

[71 FR 61303, Oct. 17, 2006]

§58.61 Monitoring other pollutants.

The Administrator may promulgate criteria similar to those referenced in subpart B of this part for monitoring a pollutant for which an NAAQS does not exist. Such an action would be taken whenever the Administrator determines that a nationwide monitoring program is necessary to monitor such a pollutant.

[71 FR 61303, Oct. 17, 2006]

Appendix A to Part 58 - Quality Assurance Requirements for Monitors used in Evaluations of National Ambient Air Quality Standards

1. General Information

2. Quality System Requirements

3. Measurement Quality Check Requirements

4. Calculations for Data Quality Assessments

5. Reporting Requirements

6. References

1. General Information

1.1 Applicability. (a) This appendix specifies the minimum quality system requirements applicable to SLAMS and other monitor types whose data are intended to be used to determine compliance with the NAAQS (e.g., SPMs, tribal, CASTNET, NCore, industrial, etc.), unless the EPA Regional Administrator has reviewed and approved the monitor for exclusion from NAAQS use and these quality assurance requirements.

(b) Primary quality assurance organizations are encouraged to develop and maintain quality systems more extensive than the required minimums. Additional guidance for the requirements reflected in this appendix can be found in the “Quality Assurance Handbook for Air Pollution Measurement Systems,” Volume II (see reference 10 of this appendix) and at a national level in references 1, 2, and 3 of this appendix.

1.2 Primary Quality Assurance Organization (PQAO). A PQAO is defined as a monitoring organization or a group of monitoring organizations or other organization that is responsible for a set of stations that monitors the same pollutant and for which data quality assessments will be pooled. Each criteria pollutant sampler/monitor must be associated with only one PQAO. In some cases, data quality is assessed at the PQAO level.

1.2.1 Each PQAO shall be defined such that measurement uncertainty among all stations in the organization can be expected to be reasonably homogeneous as a result of common factors. Common factors that should be considered in defining PQAOs include:

(a) Operation by a common team of field operators according to a common set of procedures;

(b) Use of a common quality assurance project plan (QAPP) or standard operating procedures;

(c) Common calibration facilities and standards;

(d) Oversight by a common quality assurance organization; and

(e) Support by a common management organization (i.e., state agency) or laboratory.

Since data quality assessments are made and data certified at the PQAO level, the monitoring organization identified as the PQAO will be responsible for the oversight of the quality of data of all monitoring organizations within the PQAO.

1.2.2 Monitoring organizations having difficulty describing their PQAO or in assigning specific monitors to PQAOs should consult with the appropriate EPA Regional Office. Any consolidation of monitoring organizations to PQAOs shall be subject to final approval by the appropriate EPA Regional Office.

1.2.3 Each PQAO is required to implement a quality system that provides sufficient information to assess the quality of the monitoring data. The quality system must, at a minimum, include the specific requirements described in this appendix. Failure to conduct or pass a required check or procedure, or a series of required checks or procedures, does not by itself invalidate data for regulatory decision making. Rather, PQAOs and the EPA shall use the checks and procedures required in this appendix in combination with other data quality information, reports, and similar documentation that demonstrate overall compliance with Part 58. Accordingly, the EPA and PQAOs shall use a “weight of evidence” approach when determining the suitability of data for regulatory decisions. The EPA reserves the authority to use or not use monitoring data submitted by a monitoring organization when making regulatory decisions based on the EPA's assessment of the quality of the data. Consensus-built validation templates or validation criteria already approved in QAPPs should be used as the basis for the weight of evidence approach.

1.3 Definitions.

(a) Measurement Uncertainty. A term used to describe deviations from a true concentration or estimate that are related to the measurement process and not to spatial or temporal population attributes of the air being measured.

(b) Precision. A measurement of mutual agreement among individual measurements of the same property usually under prescribed similar conditions, expressed generally in terms of the standard deviation.

(c) Bias. The systematic or persistent distortion of a measurement process which causes errors in one direction.

(d) Accuracy. The degree of agreement between an observed value and an accepted reference value. Accuracy includes a combination of random error (imprecision) and systematic error (bias) components which are due to sampling and analytical operations.

(e) Completeness. A measure of the amount of valid data obtained from a measurement system compared to the amount that was expected to be obtained under correct, normal conditions.

(f) Detection Limit. The lowest concentration or amount of target analyte that can be determined to be different from zero by a single measurement at a stated level of probability.
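The general terms defined above can be illustrated with a small Python sketch: completeness as a ratio of valid to expected data, and the split of measurement error into a systematic part (bias) and a random part (imprecision). This is only an illustration of the definitions using simple sample statistics; the statistics actually required for data quality assessments are specified in section 4 of this appendix, and the function names and inputs here are hypothetical.

```python
import statistics


def completeness_pct(n_valid, n_expected):
    """Completeness (definition (e)): valid data obtained vs. data expected, in percent."""
    if n_expected <= 0:
        raise ValueError("n_expected must be positive")
    return 100.0 * n_valid / n_expected


def bias_and_precision(measured, reference):
    """Compare measurements with accepted reference values.

    Returns (mean error, standard deviation of errors): the mean error reflects
    systematic distortion in one direction (bias, definition (c)); the standard
    deviation reflects mutual agreement among measurements (precision, definition (b)).
    """
    if len(measured) != len(reference) or len(measured) < 2:
        raise ValueError("need at least two paired values")
    errors = [m - r for m, r in zip(measured, reference)]
    return statistics.mean(errors), statistics.stdev(errors)


# Hypothetical example: 330 valid daily values out of 365 expected.
print(round(completeness_pct(330, 365), 1))  # 90.4
```

A constant offset (for example, every reading 0.5 units high) produces a nonzero bias with zero imprecision, while readings scattered symmetrically around the reference produce zero bias with nonzero imprecision; accuracy (definition (d)) reflects both.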

1.4 Measurement Quality Checks. The measurement quality checks described in section 3 of this appendix shall be reported to AQS and are included in the data required for certification.

1.5 Assessments and Reports. Periodic assessments and documentation of data quality are required to be reported to the EPA. To provide national uniformity in this assessment and reporting of data quality for all networks, specific assessment and reporting procedures are prescribed in detail in sections 3, 4, and 5 of this appendix. On the other hand, the selection and extent of the quality assurance and quality control activities used by a monitoring organization depend on a number of local factors such as field and laboratory conditions, the objectives for monitoring, the level of data quality needed, the expertise of assigned personnel, the cost of control procedures, pollutant concentration levels, etc. Therefore, quality system requirements in section 2 of this appendix are specified in general terms to allow each monitoring organization to develop a quality system that is most efficient and effective for its own circumstances while achieving the data quality objectives described in this appendix.

2. Quality System Requirements

A quality system (reference 1 of this appendix) is the means by which an organization manages the quality of the monitoring information it produces in a systematic, organized manner. It provides a framework for planning, implementing, assessing and reporting work performed by an organization and for carrying out required quality assurance and quality control activities.

2.1 Quality Management Plans and Quality Assurance Project Plans. All PQAOs must develop a quality system that is described and approved in quality management plans (QMP) and QAPPs to ensure that the monitoring results:

(a) Meet a well-defined need, use, or purpose (reference 5 of this appendix);

(b) Provide data of adequate quality for the intended monitoring objectives;

(c) Satisfy stakeholder expectations;

(d) Comply with applicable standards and specifications;

(e) Comply with statutory (and other legal) requirements; and

(f) Reflect consideration of cost and economics.

2.1.1 The QMP describes the quality system in terms of the organizational structure, functional responsibilities of management and staff, lines of authority, and required interfaces for those planning, implementing, assessing and reporting activities involving environmental data operations (EDO). The QMP must be suitably documented in accordance with EPA requirements (reference 2 of this appendix), and approved by the appropriate Regional Administrator, or his or her representative. The quality system described in the QMP will be reviewed during the systems audits described in section 2.5 of this appendix. Organizations that implement long-term monitoring programs with EPA funds should have a separate QMP document. Smaller organizations, organizations that do infrequent work with the EPA, or organizations with monitoring programs of limited size or scope may combine the QMP with the QAPP if approved by, and subject to any conditions of, the EPA. Additional guidance on this process can be found in reference 10 of this appendix. Approval of the recipient's QMP by the appropriate Regional Administrator or his or her representative may allow delegation of authority to the PQAO's independent quality assurance function to review and approve environmental data collection activities adequately described and covered under the scope of the QMP and documented in appropriate planning documents (QAPP). Where a PQAO or monitoring organization has been delegated authority to review and approve its QAPP, an electronic copy must be submitted to the EPA region at the time it is submitted to the PQAO/monitoring organization's QAPP approving authority. The QAPP will be reviewed by the EPA during systems audits or circumstances related to data quality. The QMP submission and approval dates for PQAOs/monitoring organizations must be reported to AQS either by the monitoring organization or the EPA Region.

2.1.2 The QAPP is a formal document describing, in sufficient detail, the quality system that must be implemented to ensure that the results of work performed will satisfy the stated objectives. PQAOs must develop QAPPs that describe how the organization intends to control measurement uncertainty to an appropriate level in order to achieve the data quality objectives for the EDO. The quality assurance policy of the EPA requires every EDO to have a written and approved QAPP prior to the start of the EDO. It is the responsibility of the PQAO/monitoring organization to adhere to this policy. The QAPP must be suitably documented in accordance with EPA requirements (reference 3 of this appendix) and include standard operating procedures for all EDOs either within the document or by appropriate reference. The QAPP must identify each PQAO operating monitors under the QAPP as well as generally identify the sites and monitors to which it is applicable either within the document or by appropriate reference. The QAPP submission and approval dates must be reported to AQS either by the monitoring organization or the EPA Region.

2.1.3 The PQAO/monitoring organization's quality system must have adequate resources both in personnel and funding to plan, implement, assess and report on the achievement of the requirements of this appendix and its approved QAPP.

2.2 Independence of Quality Assurance. The PQAO must provide for a quality assurance management function, that aspect of the overall management system of the organization that determines and implements the quality policy defined in a PQAO's QMP. Quality management includes strategic planning, allocation of resources and other systematic planning activities (e.g., planning, implementation, assessing and reporting) pertaining to the quality system. The quality assurance management function must have sufficient technical expertise and management authority to conduct independent oversight and assure the implementation of the organization's quality system relative to the ambient air quality monitoring program and should be organizationally independent of environmental data generation activities.

2.3 Data Quality Performance Requirements.

2.3.1 Data Quality Objectives (DQOs). The DQOs, or the results of other systematic planning processes, are statements that define the appropriate type of data to collect and specify the tolerable levels of potential decision errors that will be used as a basis for establishing the quality and quantity of data needed to support the monitoring objectives (reference 5 of this appendix). The DQOs will be developed by the EPA to support the primary regulatory objectives for each criteria pollutant. As they are developed, they will be added to the regulation. The quality of the conclusions derived from data interpretation can be affected by population uncertainty (spatial or temporal uncertainty) and measurement uncertainty (uncertainty associated with collecting, analyzing, reducing and reporting concentration data). This appendix focuses on assessing and controlling measurement uncertainty.

2.3.1.1 Measurement Uncertainty for Automated and Manual PM2.5 Methods. The goal for acceptable measurement uncertainty is defined for precision as an upper 90 percent confidence limit for the coefficient of variation (CV) of 10 percent and ±10 percent for total bias.

2.3.1.2 Measurement Uncertainty for Automated O3 Methods. The goal for acceptable measurement uncertainty is defined for precision as an upper 90 percent confidence limit for the CV of 7 percent and for bias as an upper 95 percent confidence limit for the absolute bias of 7 percent.

2.3.1.3 Measurement Uncertainty for Pb Methods. The goal for acceptable measurement uncertainty is defined for precision as an upper 90 percent confidence limit for the CV of 20 percent and for bias as an upper 95 percent confidence limit for the absolute bias of 15 percent.

2.3.1.4 Measurement Uncertainty for NO2. The goal for acceptable measurement uncertainty is defined for precision as an upper 90 percent confidence limit for the CV of 15 percent and for bias as an upper 95 percent confidence limit for the absolute bias of 15 percent.

2.3.1.5 Measurement Uncertainty for SO2. The goal for acceptable measurement uncertainty is defined for precision as an upper 90 percent confidence limit for the CV of 10 percent and for bias as an upper 95 percent confidence limit for the absolute bias of 10 percent.
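Because the goals in sections 2.3.1.1 through 2.3.1.5 are stated as upper confidence limits rather than point estimates, checking a set of results against them involves a small calculation. The following Python sketch assumes the percent-difference statistics of section 4 of this appendix (not reproduced here) take this form: d_i = (measured − audit)/audit × 100; CV upper bound = s(d)·√((n−1)/χ²₀.₁,ₙ₋₁); and |bias| upper bound = mean(|d|) + t₀.₉₅,ₙ₋₁·s(|d|)/√n, where s is the sample standard deviation. Verify these against section 4 before relying on them. The sketch omits the bias-sign determination, and section 4 applies different collocated-sampler statistics to PM2.5 and Pb, so it is closest to the one-point QC checks for the gaseous pollutants. The quantiles are passed in by the caller (from statistical tables or a library) to keep the example free of third-party dependencies, and all function names are hypothetical.

```python
import math

# Goals from sections 2.3.1.1-2.3.1.5: (CV upper 90% limit, |bias| upper 95% limit), percent.
# The PM2.5 bias goal is stated as +/-10 percent total bias rather than as an upper
# confidence limit on absolute bias, so it is left as None here.
DQO_GOALS = {
    "PM2.5": (10.0, None),
    "O3": (7.0, 7.0),
    "Pb": (20.0, 15.0),
    "NO2": (15.0, 15.0),
    "SO2": (10.0, 10.0),
}


def percent_differences(measured, audit):
    """d_i = (measured - audit) / audit * 100 for each check pair."""
    return [(m - a) / a * 100.0 for m, a in zip(measured, audit)]


def _sample_sd(values):
    """Sample standard deviation written in the n*sum(x^2) - (sum x)^2 form."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values")
    s1 = sum(values)
    s2 = sum(v * v for v in values)
    # max(..., 0.0) guards against tiny negative results from floating-point rounding.
    return math.sqrt(max(n * s2 - s1 * s1, 0.0) / (n * (n - 1)))


def cv_upper_bound(d, chi2_10th):
    """Upper bound on CV; chi2_10th is the 10th percentile of chi-square with n-1 df."""
    n = len(d)
    return _sample_sd(d) * math.sqrt((n - 1) / chi2_10th)


def abs_bias_upper_bound(d, t_95):
    """Upper bound on |bias|; t_95 is the 95th percentile of Student's t with n-1 df."""
    abs_d = [abs(x) for x in d]
    n = len(abs_d)
    return sum(abs_d) / n + t_95 * _sample_sd(abs_d) / math.sqrt(n)


def goal_status(pollutant, cv_ub, bias_ub):
    """Return (cv_ok, bias_ok); bias_ok is None when no confidence-limit goal applies."""
    cv_goal, bias_goal = DQO_GOALS[pollutant]
    bias_ok = None if bias_goal is None else bias_ub <= bias_goal
    return cv_ub <= cv_goal, bias_ok


# Hypothetical percent differences from five checks (n - 1 = 4 degrees of freedom).
d = [2.0, -1.0, 3.0, 1.0, 0.0]
cv = cv_upper_bound(d, chi2_10th=1.064)  # table value, chi-square 10th percentile, 4 df
bias = abs_bias_upper_bound(d, t_95=2.132)  # table value, Student's t 95th percentile, 4 df
print(round(cv, 2), round(bias, 2), goal_status("O3", cv, bias))
# 3.07 2.49 (True, True)
```

Passing the quantiles in explicitly keeps the degrees of freedom visible at the call site; with a larger number of checks, the quantiles move closer to their large-sample values and the upper bounds tighten toward the point estimates.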

2.4 National Performance Evaluation Programs. The PQAO shall provide for the implementation of a program of independent and adequate audits of all monitors providing data for NAAQS compliance purposes including the provision of adequate resources for such audit programs. A monitoring plan (or QAPP) which provides for PQAO participation in the EPA's National Performance Audit Program (NPAP), the PM2.5 Performance Evaluation Program (PM2.5-PEP), and the Pb Performance Evaluation Program (Pb-PEP) and indicates the consent of the PQAO for the EPA to apply an appropriate portion of the grant funds, which the EPA would otherwise award to the PQAO for these QA activities, will be deemed by the EPA to meet this requirement. For clarification and to participate, PQAOs should contact either the appropriate EPA regional quality assurance (QA) coordinator at the appropriate EPA Regional Office location, or the NPAP coordinator at the EPA Air Quality Assessment Division, Office of Air Quality Planning and Standards, in Research Triangle Park, North Carolina. The PQAOs that plan to implement these programs (self-implement) rather than use the federal programs must meet the adequacy requirements found in the appropriate sections that follow, as well as meet the definition of independent assessment that follows.

2.4.1 Independent assessment. An assessment performed by a qualified individual, group, or organization that is not part of the organization directly performing and accountable for the work being assessed. This auditing organization must not be involved with the generation of the ambient air monitoring data. An organization can conduct the performance evaluation (PE) if it can meet this definition and has a management structure that, at a minimum, will allow for the separation of its routine sampling personnel from its auditing personnel by two levels of management. In addition, the sample analysis of audit filters must be performed by a laboratory facility and laboratory equipment separate from the facilities used for routine sample analysis. Field and laboratory personnel will be required to meet PE field and laboratory training and certification requirements to establish comparability to federally implemented programs.