
05/18/2022

...

Testing and Assessment: An Employer’s Guide to Good Practices

This chapter describes some of the most common assessment instrument scoring procedures. It also discusses how to interpret results properly and how to use them effectively. Other issues regarding the proper use of assessment tools are also discussed.

Chapter Highlights

- Assessment instrument scoring procedures

- Test interpretation methods: norm and criterion-referenced tests

- Interpreting test results

- Processing test results to make employment decisions: rank-ordering and cut-off scores

- Combining information from many assessment tools

- Minimizing adverse impact

Principle of Assessment

| Ensure that scores are interpreted properly. |

1. Assessment instrument scoring procedures

Test publishers may offer one or more ways to score the tests you purchase. Available options may range from hand scoring by your staff to machine scanning and scoring done by the publisher. All options have their advantages and disadvantages. When you select the tests for use, investigate the available scoring options. Your staff's time, turnaround time for test results, and cost may all play a part in your purchasing decision.

- Hand scoring. The answer sheet is scored by counting the number of correct responses, often with the aid of a stencil. These scores may then have to be converted from the raw score count to a form that is more meaningful, such as a percentile or standard score. Staff must be trained on proper hand scoring procedures and raw score conversion. This method is more prone to error than machine scoring. To improve accuracy, scoring should be double-checked. Hand scoring a test may take more time and effort, but it may also be the least expensive method when there are only a small number of tests to score.

- Computer-based scoring. Tests can be scored using a computer and test scoring software purchased from the test publisher. When the test is administered in a paper-and-pencil format, raw scores and identification information must be key-entered by staff following the completion of the test session. Converted scores and interpretive reports can be printed immediately. When the test is administered on the computer, scores are most often generated automatically upon completion of the test; there is no need to key-enter raw scores or identifying information. This is one of the major advantages of computer-based testing.

- Optical scanning. Machine scorable answer sheets are now readily available for many multiple choice tests. They are quickly scanned and scored by an optical mark reader. You may be able to score these answer sheets in-house or send them to the test publisher for scoring.

  - On-site. You will need a personal computer system (computer, monitor, and printer), an optical reader, and special test scoring software from the publisher. Some scanning programs not only generate test scores but also provide employers with individual or group interpretive reports. Scanning systems can be costly, and staff must learn to operate the scanner and the computer program that does the test scoring and reporting. However, when testing volume is heavy, using a scanner is much more efficient than hand scoring or key-entering raw scores.

  - Mail-in and fax scoring. In many cases the completed machine-scannable answer sheets can be mailed or faxed to the test publisher. The publisher scores the answer sheets and returns the scores and test reports to the employer. Test publishers generally charge a fee for each test scored and for each report generated.

    For mail-in service, there is a delay of several days between mailing answer sheets and receiving the test results. Overnight mail by private or public carrier will shorten the wait but will add to the cost. Some publishers offer a scoring service by fax. This considerably shortens the turnaround time, but greater care must be taken to protect the confidentiality of the results.
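The hand-scoring steps described above (count the responses that match a key, then convert the raw count with the publisher's table) can be sketched in a few lines of Python. The 10-item answer key, the answer sheet, and the raw-to-percentile table are hypothetical illustrations, not values from any real test; an actual conversion table comes from the test manual.

```python
# Hypothetical 10-item answer key (not from any real test).
ANSWER_KEY = ["B", "D", "A", "C", "C", "A", "B", "D", "A", "B"]

# Hypothetical raw score -> percentile conversion table; real tables
# come from the publisher's test manual.
RAW_TO_PERCENTILE = {0: 1, 1: 2, 2: 4, 3: 8, 4: 16, 5: 30,
                     6: 50, 7: 70, 8: 84, 9: 93, 10: 99}


def raw_score(responses, key=ANSWER_KEY):
    """Count the responses that match the key, as a scoring stencil does."""
    if len(responses) != len(key):
        raise ValueError("answer sheet and key have different lengths")
    return sum(1 for given, correct in zip(responses, key) if given == correct)


def check_hand_tally(responses, hand_tally, key=ANSWER_KEY):
    """Return True if a scorer's manual count agrees with a recount."""
    return raw_score(responses, key) == hand_tally


# Usage: one hypothetical answer sheet with two wrong answers.
sheet = ["B", "D", "A", "C", "A", "A", "B", "D", "C", "B"]
score = raw_score(sheet)               # 8 correct
percentile = RAW_TO_PERCENTILE[score]  # 84 in this made-up table
print(score, percentile)
```

The recount in `check_hand_tally` mirrors the advice to double-check hand scoring: any disagreement flags the sheet for a second look.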

2. Test interpretation methods: norm and criterion-referenced tests

Employment tests are used to make inferences about people's characteristics, capabilities, and likely future performance on the job. What does the test score mean? Is the applicant qualified? To help answer these questions, consider what the test is designed to accomplish. Does the test compare one person's score to those obtained by others in the occupation, or does it measure the absolute level of skill an individual has obtained? These two methods are described below.

- Norm-referenced test interpretation. In norm-referenced test interpretation, the scores that the applicant receives are compared with the test performance of a particular reference group. In this case the reference group is the norm group. The norm group generally consists of large representative samples of individuals from specific populations, such as high school students, clerical workers, or electricians. It is their average test performance and the distribution of their scores that set the standard and become the test norms of the group.

The test manual will usually provide detailed descriptions of the norm groups and the test norms. To ensure valid scores and meaningful interpretation of norm-referenced tests, make sure that your target group is similar to the norm group. Compare the educational level, the occupational, language and cultural backgrounds, and other demographic characteristics of the individuals making up the two groups to determine their similarity.

For example, consider an accounting knowledge test that was standardized on the scores obtained by employed accountants with at least 5 years of experience. This would be an appropriate test if you are interested in hiring experienced accountants. However, this test would be inappropriate if you are looking for an accounting clerk. You should look for a test normed on accounting clerks or a closely related occupation.

- Criterion-referenced test interpretation. In criterion-referenced tests, the test score indicates the amount of skill or knowledge the test taker possesses in a particular subject or content area. The test score is not used to indicate how well the person does compared to others; it relates solely to the test taker's degree of competence in the specific area assessed. Criterion-referenced assessment is generally associated with educational and achievement testing, licensing, and certification.

A particular test score is generally chosen as the minimum acceptable level of competence. How is a level of competence chosen? The test publisher may develop a mechanism that converts test scores into proficiency standards, or the company may use its own experience to relate test scores to competence standards.

For example, suppose your company needs clerical staff with word processing proficiency. The test publisher may provide you with a conversion table relating word processing skill to various levels of proficiency, or your own experience with current clerical employees can help you to determine the passing score. You may decide that a minimum of 35 words per minute with no more than two errors per 100 words is sufficient for a job with occasional word processing duties. If you have a job with high production demands, you may wish to set the minimum at 75 words per minute with no more than one error per 100 words.
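A criterion-referenced decision compares a score only with a fixed standard, never with other test takers. The sketch below encodes the two word processing standards from the example; the class and function names and the sample typing result (250 words in 5 minutes with 4 errors) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProficiencyStandard:
    """Minimum typing speed and maximum error rate for a job."""
    min_wpm: float
    max_errors_per_100_words: float


# The two standards from the word processing example above.
OCCASIONAL_USE = ProficiencyStandard(min_wpm=35, max_errors_per_100_words=2)
HIGH_PRODUCTION = ProficiencyStandard(min_wpm=75, max_errors_per_100_words=1)


def meets_standard(words_typed, minutes, errors, standard):
    """Compare one test taker's result with a fixed standard (no norm group)."""
    if words_typed <= 0 or minutes <= 0:
        raise ValueError("words_typed and minutes must be positive")
    wpm = words_typed / minutes
    errors_per_100 = 100 * errors / words_typed
    return wpm >= standard.min_wpm and errors_per_100 <= standard.max_errors_per_100_words


# Hypothetical result: 250 words in 5 minutes with 4 errors (50 wpm, 1.6 errors per 100).
print(meets_standard(250, 5, 4, OCCASIONAL_USE))   # True
print(meets_standard(250, 5, 4, HIGH_PRODUCTION))  # False
```

The same result passes one standard and fails the other, which is the point of criterion-referenced interpretation: the job's requirements, not the other applicants, set the bar.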

It is important to ensure that all inferences you make on the basis of test results are well founded. Only use tests for which sufficient information is available to guide and support score interpretation. Read the test manual for instructions on how to properly interpret the test results. This leads to the next principle of assessment.

Principle of Assessment

| Ensure that scores are interpreted properly. |

3. Interpreting test results

Test results are usually presented in terms of numerical scores, such as raw scores, standard scores, and percentile scores. In order to interpret test scores properly, you need to understand the scoring system used.

- Types of scores

- Raw scores. These refer to the unadjusted scores on the test. Usually the raw score represents the number of items answered correctly, as in mental ability or achievement tests. Some types of assessment tools, such as work value inventories and personality inventories, have no "right" or "wrong" answers. In such cases, the raw score may represent the number of positive responses for a particular trait.

Raw scores do not provide much useful information. Consider a test taker who gets 25 out of 50 questions correct on a math test. It's hard to know whether "25" is a good score or a poor score. When you compare the results to all the other individuals who took the same test, you may discover that this was the highest score on the test.

In general, for norm-referenced tests, it is important to see where a particular score lies within the context of the scores of other people. Adjusting or converting raw scores into standard scores or percentiles will provide you with this kind of information. For criterion-referenced tests, it is important to see what a particular score indicates about proficiency or competence.

- Standard scores. Standard scores are converted raw scores. They indicate where a person's score lies in comparison to a reference group. For example, if the test manual indicates that the average or mean score for the group on a test is 50, then an individual who gets a higher score is above average, and an individual who gets a lower score is below average. Standard scores are discussed in more detail below in the section on standard score distributions.

- Percentile score. A percentile score is another type of converted score. An individual's raw score is converted to a number indicating the percent of people in the reference group who scored at or below the test taker's score. For example, a score at the 70th percentile means that the individual's score is the same as or higher than the scores of 70% of those who took the test. The 50th percentile is known as the median and represents the middle score of the distribution.

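To make the raw-score example concrete, the sketch below converts a raw score into a percentile rank and a standard score relative to a norm group. The norm-group scores are invented for illustration (a 50-item math test on which 25 happens to be the highest score). The percentile counts scores at or below the test taker's, and the target scale (mean 50, standard deviation 10) is the one Test B uses in Figure 2, not any particular publisher's scale.

```python
from statistics import mean, pstdev

# Hypothetical raw scores for a small norm group on a 50-item math test.
NORM_SCORES = [14, 16, 17, 18, 19, 20, 21, 22, 23, 25]


def percentile_rank(raw, norm_scores):
    """Percent of the norm group scoring at or below `raw`."""
    at_or_below = sum(1 for s in norm_scores if s <= raw)
    return 100 * at_or_below / len(norm_scores)


def standard_score(raw, norm_scores, new_mean=50, new_sd=10):
    """Re-express `raw` on a scale with the given mean and standard deviation."""
    # z is the distance from the norm-group mean in standard deviation units;
    # pstdev treats the norm group as the whole reference population.
    z = (raw - mean(norm_scores)) / pstdev(norm_scores)
    return new_mean + new_sd * z


print(percentile_rank(25, NORM_SCORES))           # 100.0: top of this group
print(round(standard_score(25, NORM_SCORES), 1))  # 67.2
```

A raw score of 25 out of 50 looks middling on its own, but against this (made-up) norm group it is the highest score and lands about 1.7 standard deviations above the mean. Real norms come from much larger samples described in the test manual.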

- Score distribution

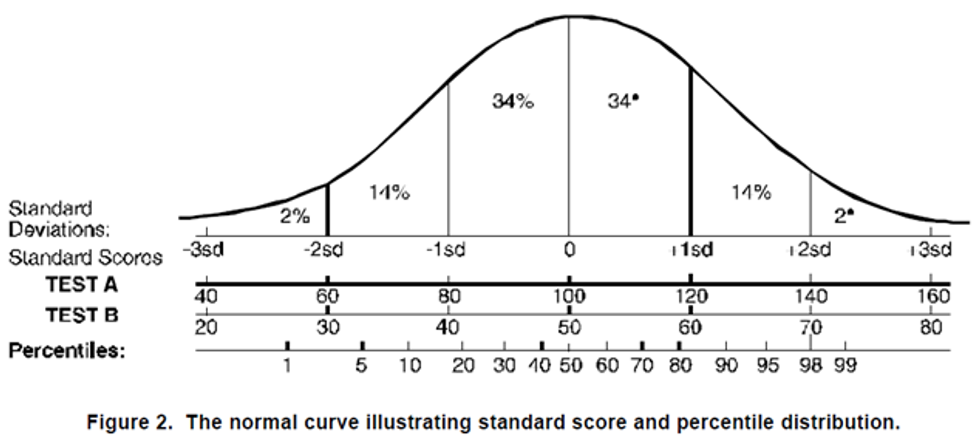

- Normal curve. A great many human characteristics, such as height, weight, math ability, and typing skill, are distributed in the population at large in a typical pattern. This pattern of distribution is known as the normal curve and has a symmetrical bell-shaped appearance. The curve is illustrated in Figure 2. As you can see, a large number of individual cases cluster in the middle of the curve. The farther from the middle or average you go, the fewer the cases. In general, distributions of test scores follow the same normal curve pattern. Most individuals get scores in the middle range. As the extremes are approached, fewer and fewer cases exist, indicating that progressively fewer individuals get low scores (left of center) and high scores (right of center).

- Standard score distribution. There are two characteristics of a standard score distribution that are reported in test manuals. One is the mean, a measure of central tendency; the other is the standard deviation, a measure of the variability of the distribution.

- Mean. The most commonly used measure of central tendency is the mean or arithmetic average score. Test developers generally assign an arbitrary number to represent the mean standard score when they convert from raw scores to standard scores. Look at Figure 2. Test A and Test B are two tests with different standard score means. Notice that Test A has a mean of 100 and Test B has a mean of 50. If an individual got a score of 50 on Test A, that person did very poorly. However, a score of 50 on Test B would be an average score.

- Standard deviation. The standard deviation is the most commonly used measure of variability. It is used to describe the distribution of scores around the mean. Figure 2 shows the percent of cases 1, 2, and 3 standard deviations (sd) above the mean and 1, 2, and 3 standard deviations below the mean. As you can see, 34% of the cases lie between the mean and 1 sd, and 34% of the cases lie between the mean and -1 sd. Thus, approximately 68% of the cases lie between -1 and 1 standard deviations. Notice that for Test A, the standard deviation is 20, and 68% of the test takers score between 80 and 120. For Test B the standard deviation is 10, and 68% of the test takers score between 40 and 60.

- Percentile distribution. The bottom horizontal line below the curve in Figure 2 is labeled "Percentiles." It represents the distribution of scores in percentile units. Notice that the median is in the same position as the mean on the normal curve. By knowing the percentile score of an individual, you already know how that individual compares with others in the group. An individual at the 98th percentile scored the same as or better than 98% of the individuals in the group. This is roughly equivalent to a standard score of 140 on Test A or 70 on Test B (two standard deviations above the mean).

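The Figure 2 relationships can be checked numerically with Python's standard-library `statistics.NormalDist`, assuming scores are exactly normally distributed (real score distributions only approximate this). Test A and Test B use the means and standard deviations given above.

```python
from statistics import NormalDist

TEST_A = NormalDist(mu=100, sigma=20)
TEST_B = NormalDist(mu=50, sigma=10)


def share_within_one_sd(dist):
    """Fraction of cases between -1 and +1 standard deviation."""
    return dist.cdf(dist.mean + dist.stdev) - dist.cdf(dist.mean - dist.stdev)


def percentile_of(score, dist):
    """Percent of the distribution at or below `score`."""
    return 100 * dist.cdf(score)


def score_at_percentile(pct, dist):
    """Standard score corresponding to a percentile (0 < pct < 100)."""
    return dist.inv_cdf(pct / 100)


print(round(share_within_one_sd(TEST_A), 3))  # 0.683
print(round(percentile_of(140, TEST_A)))      # 98
print(round(percentile_of(70, TEST_B)))       # 98
print(round(percentile_of(50, TEST_A), 1))    # 0.6: a very poor score on Test A
print(percentile_of(50, TEST_B))              # 50.0: average on Test B
```

Going the other way, `score_at_percentile(98, TEST_A)` returns about 141, so the 140 on the figure is a rounded correspondence rather than an exact one.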

4. Processing test results to make employment decisions: rank-ordering and cut-off scores

The rank-ordering of test results, the use of cut-off scores, or some combination of the two is commonly used to assess the qualifications of people and to make employment-related decisions about them. These are described below.

Rank-ordering is a process of arranging candidates on a list from highest score to lowest score based on their test results. In rank-order selection, candidates are chosen on a top-down basis.

A cut-off score is the minimum score that a candidate must have to qualify for a position. Employers generally set the cut-off score at a level which they determine is directly related to job success. Candidates who score below this cut-off generally are not considered for selection. Test publishers typically recommend that employers base their selection of a cut-off score on the norms of the test.
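Rank-ordering and a cut-off score are often combined: candidates below the cut-off are dropped, and the rest are selected top-down. The sketch below assumes hypothetical candidate names, scores, a cut-off of 60, and two openings. How to break ties is a policy decision the code does not make; Python's `sorted` is stable, so tied candidates simply keep their input order.

```python
from typing import List, Tuple

Candidate = Tuple[str, float]  # (name, test score)


def rank_order(candidates: List[Candidate]) -> List[Candidate]:
    """Arrange candidates from highest to lowest score."""
    return sorted(candidates, key=lambda c: c[1], reverse=True)


def apply_cutoff(candidates: List[Candidate], cutoff: float) -> List[Candidate]:
    """Keep only candidates at or above the minimum qualifying score."""
    return [c for c in candidates if c[1] >= cutoff]


def top_down_select(candidates: List[Candidate], cutoff: float, openings: int) -> List[Candidate]:
    """Screen with the cut-off, then fill openings in rank order."""
    return rank_order(apply_cutoff(candidates, cutoff))[:openings]


# Hypothetical applicant pool.
applicants = [("Avery", 72), ("Blake", 58), ("Casey", 85), ("Drew", 64), ("Emery", 49)]
print(top_down_select(applicants, cutoff=60, openings=2))
# [('Casey', 85), ('Avery', 72)]
```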

5. Combining information from many assessment tools

Many assessment programs use a variety of tests and procedures in their assessment of candidates. In general, you can use a "multiple hurdles" approach or a "total assessment" approach, or a combination of the two, in using the assessment information obtained.

- Multiple hurdles approach. In this approach, test takers must pass each test or procedure (usually by scoring above a cut-off score) to continue within the assessment process. The multiple hurdles approach is appropriate and necessary in certain situations, such as requiring test takers to pass a series of tests for licensing or certification, or requiring all workers in a nuclear power plant to pass a safety test. It may also be used to reduce the total cost of assessment by administering less costly screening devices to everyone, but having only those who do well take the more expensive tests or other assessment tools.

- Total assessment approach. In this approach, test takers are administered every test and procedure in the assessment program. The information gathered is used in a flexible or counterbalanced manner. This allows a high score on one test to be counterbalanced with a low score on another. For example, an applicant who performs poorly on a written test, but shows great enthusiasm for learning and is a very hard worker, may still be an attractive hire.
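The difference between the two approaches can be sketched with one hypothetical candidate. The hurdle names, cut-offs, scores, and the overall threshold of 65 are invented; the total-assessment function uses a plain average (equal weights) to keep the comparison simple.

```python
# Hypothetical hurdles, ordered from least to most costly to administer.
HURDLES = [("screening test", 50), ("written test", 60), ("work sample", 70)]

# Hypothetical candidate: strong overall, but below the written-test cut-off.
candidate = {"screening test": 80, "written test": 55, "work sample": 90}


def multiple_hurdles(scores, hurdles):
    """Administer hurdles in order and stop at the first one failed.

    Returns (passed_all, hurdles_administered); hurdles after a failure are
    never given, which is where the cost savings come from.
    """
    administered = []
    for name, cutoff in hurdles:
        administered.append(name)
        if scores[name] < cutoff:
            return False, administered
    return True, administered


def total_assessment(scores, threshold):
    """Compensatory use: a high score on one tool can offset a low one elsewhere."""
    average = sum(scores.values()) / len(scores)  # equal weights for simplicity
    return average >= threshold


print(multiple_hurdles(candidate, HURDLES))  # (False, ['screening test', 'written test'])
print(total_assessment(candidate, 65))       # True (average is 75)
```

Under multiple hurdles this candidate is screened out before the work sample is ever given; under total assessment the strong work sample and screening scores counterbalance the weak written test.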

A key decision in using the total assessment approach is determining the relative weights to assign to each assessment instrument in the program.

Figure 3 is a simple example of how assessment results from several tests and procedures can be combined to generate a weighted composite score.

| Assessment instrument | Assessment score (0-100) | Assigned weight | Weighted total |
|---|---|---|---|
| Interview | 80 | 8 | 640 |
| Mechanical ability test | 60 | 10 | 600 |
| H.S. course work | 90 | 5 | 450 |
| Total score | | | 1,690 |

An employer is hiring entry-level machinists. The assessment instruments consist of a structured interview, a mechanical ability test, and high school course work. After consultation with relevant staff and experts, a weight of 8 is assigned to the interview, 10 to the test, and 5 to course work. A sample score sheet for one candidate, Candidate A, is shown above. Although Candidate A's lowest score was on the mechanical ability test, the weighted composite of all the assessment instruments allowed him or her to remain a candidate for the machinist job rather than being eliminated from consideration because of that one low score.

Figure 3. Score sheet for entry-level machinist job: Candidate A.
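Figure 3's arithmetic can be reproduced with a short sketch: each score is multiplied by its assigned weight and the products are summed. The scores and weights are those in the figure; the function name and the optional rescaling at the end are illustrative additions, not part of the guide's procedure.

```python
# Weights and Candidate A's scores as given in Figure 3.
FIGURE_3_WEIGHTS = {"Interview": 8, "Mechanical ability test": 10, "H.S. course work": 5}
CANDIDATE_A = {"Interview": 80, "Mechanical ability test": 60, "H.S. course work": 90}


def weighted_composite(scores, weights):
    """Return per-instrument weighted totals and their sum."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    weighted = {name: scores[name] * w for name, w in weights.items()}
    return weighted, sum(weighted.values())


rows, total = weighted_composite(CANDIDATE_A, FIGURE_3_WEIGHTS)
print(rows)   # {'Interview': 640, 'Mechanical ability test': 600, 'H.S. course work': 450}
print(total)  # 1690

# Optional (not part of Figure 3): dividing by the sum of the weights puts
# the composite back on the 0-100 scale, here about 73.5.
print(round(total / sum(FIGURE_3_WEIGHTS.values()), 1))
```

Rescaling does not change how candidates rank against each other when everyone is scored with the same weights; it only makes the composite easier to read alongside the individual scores.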

6. Minimizing adverse impact

A well-designed assessment program will improve your ability to make effective employment decisions. However, some of the best predictors of job performance may exhibit adverse impact. As a test user, you can follow several good testing practices to minimize adverse impact in personnel assessment and to ensure that, if adverse impact does occur, it is not the result of deficiencies in your assessment tools.

- Be clear about what needs to be measured, and for what purpose. Use only assessment tools that are job-related and valid, and only use them in the way they were designed to be used.

- Use assessment tools that are appropriate for the target population.

- Do not use assessment tools that are biased or unfair to any group of people.

- Consider whether there are alternative assessment methods that have less adverse impact.

- Consider whether there is another way to use the test that either reduces or is free of adverse impact.

- Consider whether use of a test with adverse impact is necessary. Does the test improve the quality of selections to such an extent that the magnitude of adverse impact is justified by business necessity?

- If you determine that it is necessary to use a test that may result in adverse impact, it is recommended that it be used as only one part of a comprehensive assessment process. That is, apply the whole-person approach to your personnel assessment program. This approach will allow you to improve your assessment of the individual and reduce the effect of differences in average scores between groups on a single test.



Copyright 2025 J. J. Keller & Associate, Inc. For re-use options please contact copyright@jjkeller.com or call 800-558-5011.